DePaul Online Research Library

One of the really nice perks that we have as students at DePaul is the online research library. The library subscribes to many searchable databases of academic journals and magazines. Many of these databases let you access and download full-text versions of journal articles, usually in PDF format. To access the online research library, all you need is your DePaul student ID.

Another really nice feature of the DePaul online research library is that it can be integrated with the Google Scholar search engine. I'll show you how to do that in a future blog post.

Here's how to access the DePaul online research library:

2. At the top of the page, click on the "Libraries" link.

3. This will bring you to the Library page. There are lots of links to follow here; focus on the "Research" section. The way this works is that you first identify the database in which you want to conduct your search, and then use that database's internal search function to find your article. So, how do you find a database? There are a couple of ways:

Method 1:

Use this method if you're just starting out and don't know which database or journal you're going to search.

4. Click the "Journals and newspaper articles" link. That will bring you to the subject page.

5. Since we're studying statistics, a good choice for subject would be Mathematical Sciences. Click that link.

6. You get the database list. For mathematical sciences, we subscribe to 7 databases. The list gives a short description of each database and the dates it covers. Some entries also show an FT icon to indicate that we subscribe to the full text of the articles.

Method 2:

Use this method if you know the database you want to search

4. In the Research section of the Library page, click the A-Z Database List to see all the databases. If you already know which database you want to search, the A-Z list lets you skip the "subject" steps (4-5) of Method 1.

Method 3:

Use this method if you know the name of the journal that you want to search

4. Click the "Journals and newspaper articles" link.

5. In the left-hand margin, enter the name of the journal and click Search.

6. The results page shows which databases contain that journal and for which years.

Each database has its own interface, and it would be impossible for me to cover all of them, but most are self-explanatory and user-friendly. You can usually search by author, article title or keyword. Several databases also let you browse the issues of the journals they contain.

More to come...

Thursday, February 28, 2008

Using the DePaul Online Library for Research

Posted by Eliezer at Thursday, February 28, 2008

Tags: Fun stuff

Wednesday, February 20, 2008

Exit Polls

Having gone over the concept of sample size, it's interesting to turn to one of the best-known kinds of sampling: election exit polls. Who conducts these exit polls, and how are they performed?


As it turns out, ABC, Associated Press, CBS, CNN, Fox and NBC formed a consortium in 2003 called the National Election Pool (NEP). NEP then contracted with Edison/Mitofsky to conduct exit polls across the country and provide analysis and projections.

According to the latest news on the Edison/Mitofsky site, they sampled about 2,300 voters (1,442 Democrats and 880 Republicans) in yesterday's Wisconsin primary. You can find the details of all the Wisconsin exit poll questions on CNN's web site.

The Milwaukee Journal Sentinel reports the actual vote count broken down by county and congressional district for both the Democratic and Republican primaries. The total number of reported voters is about 1,515,630 (1,108,119 Democrats and 407,511 Republicans).

On the Democratic side, the exit polls showed Obama with 57.1% of the vote and Clinton with 42.0%. In the final tally, Obama beat Clinton 58% to 41%. So the exit polls were accurate to within about 1 percentage point, or roughly 11,000 of the 1.1 million Democratic votes.
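As a rough check on the arithmetic, here's a small Python sketch. It uses only the figures quoted in this post. The margin-of-error function assumes a simple random sample, which is a simplification: real exit polls sample whole precincts, so their actual error is larger than this textbook figure.

```python
import math

# Figures quoted in the post (Wisconsin Democratic primary, February 2008).
EXIT_POLL = {"Obama": 0.571, "Clinton": 0.420}
FINAL = {"Obama": 0.58, "Clinton": 0.41}   # final tally, rounded to whole percent
DEM_VOTES = 1_108_119                        # reported Democratic voters
DEM_SAMPLE = 1_442                           # Democrats in the exit-poll sample


def gap_in_votes(poll_share, final_share, total_votes):
    """Convert a difference in vote share into an approximate number of votes."""
    return abs(final_share - poll_share) * total_votes


def srs_margin_of_error(p, n, z=1.96):
    """95% margin of error for a proportion, ASSUMING a simple random sample.

    Real exit polls sample precincts in clusters, so their true margin of
    error is larger than this figure.
    """
    return z * math.sqrt(p * (1 - p) / n)


for name in EXIT_POLL:
    votes = gap_in_votes(EXIT_POLL[name], FINAL[name], DEM_VOTES)
    print(f"{name}: poll missed by about {votes:,.0f} votes")

moe = srs_margin_of_error(EXIT_POLL["Obama"], DEM_SAMPLE)
print(f"Simple-random-sample margin of error: +/-{moe:.1%}")
```

With these numbers, the gaps come out to roughly 10,000 votes for Obama and 11,000 for Clinton. The idealized margin of error for a sample of 1,442 is about ±2.6 points, so the poll landed well inside its own sampling error.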

Posted by Eliezer at Wednesday, February 20, 2008

Tags: Fun stuff

Tuesday, February 19, 2008

Graphing the Normal Distribution Curve with Excel

I know... You're thinking to yourself: How does he make such awesome normal distribution curves for those illustrations on this blog? He can't be drawing them by hand! Well, I learned the basics from Gary Andrus's web page, and then I modified his method to make it more general and a bit faster.

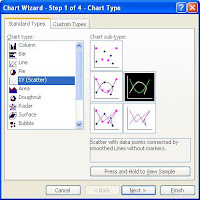

Here's how I do it using MS Excel 2003 (and I'll even throw in a few nice Excel tricks too):

Step 2: In cell B1, enter =NORMDIST(A1,0,1,0). This tells Excel to calculate the value of the normal probability density function (PDF) at the value in A1 (which is -4), for a normal distribution with a mean of 0 and a standard deviation of 1, i.e., the standard normal distribution. You can change those values if you want a different normal distribution. The last 0 tells Excel to use the probability density function rather than the cumulative distribution function (you overachievers can try using 1 as the last value for extra credit).

Step 4: I usually make it look a little nicer by right-clicking the x-axis, selecting Format Axis, and changing the Minimum and Maximum on the Scale tab to -4 and 4 respectively. I also right-click the graph line itself, select Format Data Series, and on the Patterns tab give the line a heavier Weight than the default so it stands out better.
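For anyone who wants the same table outside Excel, here's a Python sketch that builds the x and PDF columns the way Step 2 does. The -4 to 4 range comes from the post. The 0.1 step size is an assumption, since the step that fills column A isn't shown. `statistics.NormalDist` (Python 3.8+) plays the role of NORMDIST: `.pdf()` corresponds to a last argument of 0 and `.cdf()` to a last argument of 1.

```python
from statistics import NormalDist


def normal_table(mean=0.0, sd=1.0, start=-4.0, stop=4.0, step=0.1):
    """Return (x, pdf, cdf) rows, like columns A and B (plus an extra-credit C) in Excel.

    The step size is an ASSUMPTION; adjust it to match your spreadsheet.
    """
    dist = NormalDist(mean, sd)
    count = int(round((stop - start) / step)) + 1
    rows = []
    for i in range(count):
        x = round(start + i * step, 10)  # avoid accumulating floating-point drift
        rows.append((x, dist.pdf(x), dist.cdf(x)))
    return rows


if __name__ == "__main__":
    for x, pdf, cdf in normal_table()[::10]:  # every 10th row: x = -4, -3, ..., 4
        print(f"{x:5.1f}  pdf={pdf:.5f}  cdf={cdf:.5f}")
```

Computing each x from the row index, rather than adding 0.1 repeatedly, keeps values like 0 and 4 exact. Repeated addition in a spreadsheet column can drift by tiny amounts.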

Posted by Eliezer at Tuesday, February 19, 2008

Tags: Fun stuff

Thursday, February 14, 2008

Current Employment Statistics from the Bureau of Labor Statistics

What interested me was the "Technical Note" in the report, which deals with sampling error. This relates directly to what we learned about confidence intervals in Chapter 8.

First, you need to know how the data are gathered. The BLS uses data from two surveys: the Current Population Survey (CPS) and the Current Employment Statistics (CES) survey. The CPS, also called the household data, is a sample of 60,000 households. The CES, also called the establishment data, is a sample of the payroll records of 160,000 businesses, covering about one-third of all nonfarm payroll workers. Those are two honkin' large samples! Each is a snapshot of the week or pay period that includes the 12th of the month.

The BLS uses the household data to calculate the size of the overall labor force and the unemployment rate. The establishment data are used to estimate the number of people employed in industry sectors such as construction, manufacturing, retail, education and government. The BLS also calculates average hourly and weekly wages each month.

The part that's relevant to our class is that this is a case of estimating a population value from a sample. So when they say that the unemployment rate is 4.9%, there is some confidence interval around that number. Here's what the Technical Note of the Employment Situation report has to say about sampling error:

When a sample rather than the entire population is surveyed, there is a chance that the sample estimates may differ from the “true” population values they represent. The exact difference, or sampling error, varies depending on the particular sample selected, and this variability is measured by the standard error of the estimate. There is about a 90-percent chance, or level of confidence, that an estimate based on a sample will differ by no more than 1.6 standard errors from the “true” population value because of sampling error.

The 90% confidence interval for the monthly change in total employment is +/- 430,000 for the household data and +/- 104,000 for the establishment data. Here are the establishment-data figures for total nonfarm employment in the latest report, which also includes the figures for Nov and Dec 07:

Nov 07 138,037,000

Dec 07 138,119,000

Jan 08 138,102,000

As you can see, the month-to-month changes (+82,000 from Nov to Dec and -17,000 from Dec to Jan) are both smaller than the +/- 104,000 confidence interval, so we can't really say that total employment went up or down over these three months.
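The comparison is just subtraction, but it can be written out as a short Python sketch. The employment figures, the ±104,000 interval and the 1.6 multiplier come from the post and the quoted Technical Note. Backing out an implied standard error as 104,000 / 1.6 is only a rough illustration, not a figure BLS publishes in the quoted text.

```python
# Establishment-survey total nonfarm employment, as listed in the post.
employment = {
    "Nov 07": 138_037_000,
    "Dec 07": 138_119_000,
    "Jan 08": 138_102_000,
}

CI_90 = 104_000   # 90% confidence interval for the monthly change (establishment data)
Z_90 = 1.6        # "no more than 1.6 standard errors", per the Technical Note

# Rough standard error implied by the interval (illustration only).
implied_se = CI_90 / Z_90

months = list(employment)
changes = {}
for prev, curr in zip(months, months[1:]):
    change = employment[curr] - employment[prev]
    changes[f"{prev} -> {curr}"] = change
    verdict = "significant" if abs(change) > CI_90 else "within sampling error"
    print(f"{prev} -> {curr}: {change:+,} ({verdict})")

print(f"Implied standard error: about {implied_se:,.0f}")
```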

You can view the latest report here: The Employment Situation

I find it hilarious that they use a dinosaur icon for the links to historical data!


Posted by Eliezer at Thursday, February 14, 2008

Tags: Fun stuff

Monday, February 11, 2008

Raw Baseball Data

Thanks to Nathan Yau at FlowingData, I found a link to a site with raw baseball data and stats at Baseball-Databank.org. The files come in CSV or MySQL format. If you're not running a MySQL database, the CSV files can easily be imported into Excel using the Data > Import External Data menu.


There was an article yesterday by Justin Wolfers in the Freakonomics column/blog at the NY Times that compared Roger Clemens's performance with that of other pitchers with long careers. The data seemed to indicate that while most pitchers' performance declined over time, Clemens's actually improved during his last few years.

The article has received quite a bit of attention and both Yau and Andrew Gelman have commented on it in their blogs. There has also been feedback from Clemens's PR firm. Interesting reading!

[UPDATE] Justin Wolfers has provided a follow-up article on the Freakonomics blog with a "step-by-step" guide to his analysis. I don't know enough (yet!) about regressions and things like r-squared values to make comments on the analysis, but this seems like a great practical example for us to work through in Chapter 12.

Posted by Eliezer at Monday, February 11, 2008

Tags: Fun stuff

Thursday, February 7, 2008

Voter Registration and Turnout

Election Data

With the Super Tuesday primaries this past Tuesday, I decided to take a brief look at whatever voting data I could find. I found the Election Assistance Commission web site with data from several past years' elections. I grabbed the 2002 registration and turnout data by state. I'd love to get some more recent data. If you know of a source, please email me or leave a comment.

My initial hypothesis was that registration and turnout should have a pretty strong correlation since, after all, you can't vote if you're not registered. On the other hand, I know that there are some pretty strong voter registration drives with multiple venues for registering, while actual voting is tightly controlled and limited to a small number of locations. Registration can be highly influenced by outside factors (such as availability and ease of access), while voting itself often needs to be self-motivated.

Here's a plot of % Registered vs. % Turnout by state for the 2002 election:

The coefficient of correlation is 0.382.
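For reference, the coefficient of correlation here is Pearson's r. A minimal Python sketch of the calculation follows. The state figures aren't reproduced in the post, so the example values are made up purely to exercise the function.

```python
import math


def pearson_r(xs, ys):
    """Pearson's coefficient of correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of the same length (at least 2 values)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-products and sums of squared deviations from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# HYPOTHETICAL % registered / % turnout values, NOT the 2002 EAC data.
registered = [70.1, 65.4, 80.2, 72.8, 68.0]
turnout = [40.3, 35.2, 45.9, 38.7, 41.1]
print(round(pearson_r(registered, turnout), 3))
```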

Breakdown by Region

Then I thought that perhaps the correlation may be culturally influenced. Could it be that in the northeast states, registration is more closely correlated with voter turnout while in another region the relationship is not as close?

I used the 4 major regions of the US (Northeast, Midwest, South and West) as defined by the US Census Regions and Divisions, and used Minitab to create the plot below:

The regions are coded in the plot as:

Northeast = 1

Midwest = 2

South = 3

West = 4

I hope to compare the basic descriptive statistics for each region, as well as the correlations, soon. On cursory inspection, I do notice some clustering of the data points.

[Edit] Correlation coefficients by region are:

Northeast: 0.676

Midwest: -0.338

South: 0.063

West: 0.665

How interesting! In the Northeast and West, voter turnout is relatively well correlated with registration, while in the South there is little correlation and in the Midwest the correlation is negative! I think a logical next step is to normalize for the total population of each region.

All just food for thought while I wait to get back into the coursework tonight...

Posted by Eliezer at Thursday, February 07, 2008

Tags: Fun stuff

Tuesday, February 5, 2008

Numb3rs and Monty Hall

Ok, I know I'm way, way behind the curve on this, but I'm just starting to get into the show Numb3rs. I missed it for two reasons: I hardly watch any TV, and the show is on Friday nights, when I don't watch any at all.

The DVDs of the show are hard to get from Blockbuster Online, but I did manage to get the last disc of season 1, which had just one episode plus some bonus material. I watched that episode, Man Hunt, last night, and it threw a lot of math and statistics at us: Bayesian analysis, Markov processes and, most importantly, the Monty Hall Problem! Charlie's explanation of the answer was too short and not all that convincing. In fact, I think it's inaccurate because the problem was misstated. In the real Monty Hall problem, it is stated at the outset that the host will always offer the choice to switch after the initial selection is made, and that makes a big difference. But I was thrilled that the problem made it to prime time anyway.

BTW, the bonus material includes an interview with Gary Lorden, chairman of the mathematics department at Caltech and math consultant to the show, as well as with the two creators of the show concept. These people clearly have a deep passion for teaching math, and with this show they've done a good job of getting some fundamental ideas out to the public.

IMHO, the best part of the bonus material is when Gary Lorden explains that part of what they were trying to demonstrate on the show is that even for smart people, math is hard. It's something that you work at. Charlie spends hours at the board working on his theories before he comes up with something. And he doesn't always get it right the first time. He's persistent though and he keeps trying until he comes up with a theory that fits the evidence.

The second-best part of the Lorden interview was when he showed the clip where Peter MacNicol, playing Dr. Larry Fleinhardt (probably meant to resemble Richard Feynman), comments that the math department is the least libidinous department on campus.

Posted by Eliezer at Tuesday, February 05, 2008

Tags: Fun stuff

Monday, February 4, 2008

Getting ready for the Central Limit Theorem

Sampling

Since the material on sampling and the Central Limit Theorem (CLT) is more or less new to me, I started reading ahead over the weekend. After reading the lecture notes, our textbook and another text that I have (Understandable Statistics), I realize that the CLT and the whole discussion of the sampling distribution of the mean involve meta-statistics. That is, we're not examining the distribution of some random variable itself; rather, we're taking the means of a bunch of samples of a random variable and looking at the distribution of those statistics.

Every time I think of meta-analysis, I think of "jootsing," a term I first read in Douglas Hofstadter's Pulitzer Prize-winning magnum opus Gödel, Escher, Bach: An Eternal Golden Braid. JOOTS stands for "Jump Out Of The System." When you joots, you leave the system itself, take a step back and look at how the system works. (BTW, that book and Hofstadter's monthly Metamagical Themas columns opened my thinking to new angles and loops. It's not light reading, but it is very enjoyable. If you have a chance, settle down with it and learn how the works of a great mathematician, a unique artist and a genius composer all share a common thread.)

So here, we're taking a step back and instead of analyzing the observations themselves, we're analyzing the mean of a bunch of samples and talking about what the mean of the means or the standard deviation of the means will be.

It also seems analogous to the first and second derivatives in calculus. The derivative is the slope of a curve, and the second derivative is the slope of the curve defined by the first derivative. There too, we take an operation (differentiation) and apply it to its own result to get to a new level.

CLT

So what does the Central Limit Theorem say? Well, to sum it up in 3 points:

- If you take samples of size n from almost any distribution (one with a finite variance) and compute the mean of each sample, the distribution of those sample means gets closer and closer to a normal distribution as n gets larger.

- The mean of the distribution of sample means equals the mean of the population.

- The standard deviation of the distribution of sample means equals the standard deviation of the population divided by the square root of n.
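These three points are easy to check with a quick simulation. The Python sketch below draws samples from an exponential distribution with mean 1 and standard deviation 1, a deliberately skewed choice. It then compares the sample means against the theorem's predictions. The distribution, n = 30 and the 10,000 repetitions are arbitrary assumptions made for illustration.

```python
import math
import random
import statistics


def sample_means(n, reps, seed=0):
    """Return `reps` sample means, each from n draws of an Exponential(rate=1) variable."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    return [statistics.fmean(rng.expovariate(1.0) for _ in range(n)) for _ in range(reps)]


n, reps = 30, 10_000
means = sample_means(n, reps)

# Population mean and standard deviation of Exponential(rate=1) are both 1.
print("mean of sample means:", round(statistics.fmean(means), 4), "(theory: 1)")
print("sd of sample means:  ", round(statistics.stdev(means), 4),
      f"(theory: 1/sqrt({n}) = {1 / math.sqrt(n):.4f})")
```

Plotting a histogram of `means` shows the bell shape emerging, even though the individual exponential draws are heavily skewed.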

Posted by Eliezer at Monday, February 04, 2008

Tags: Fun stuff

Friday, February 1, 2008

Gapminder visualizes important statistics

I found a very cool web site for a Swedish non-profit foundation called Gapminder. The foundation is concerned with "promoting sustainable global development and achievement of the United Nations Millennium Development Goals by increased use and understanding of statistics and other information about social, economic and environmental development at local, national and global levels." [emphasis mine] They've developed an awesome way to visualize statistics through a system they call Trendalyzer. Trendalyzer "unveils the beauty of statistical time series by converting boring numbers into enjoyable, animated and interactive graphics." To really appreciate it, you need to see it in action, but I'll try to describe it:


They create an x-y plot of some variables, say life expectancy vs. per capita income, and plot the data for several countries around the world. Then they show 2 additional variables: population and time. They visualize the population of each country by changing each plot point from a single dot to a circle whose size is proportional to that country's population. You can see that in the snapshot included above. To show how the data change over time (a time series), they use animation in which the circles move around the graph as time changes. The value of the time variable (usually the year) is shown as a watermark behind the graph. You can see that it's 2004 in the snapshot.

They have several video lectures and GapCasts that show some important trends in world issues, including poverty, maternal mortality and access to public services. I highly recommend taking the time to see these presentations. The content is eye-opening and the visual use of statistics is very original.

Posted by Eliezer at Friday, February 01, 2008

Tags: Fun stuff

Thursday, January 31, 2008

The best team in NFL history

Both the 1972 Miami Dolphins and the 2007 New England Patriots were undefeated in the regular season. Dr. Jonathan Sackner-Bernstein, an admitted Dolphins fan, has looked at the question of which was the better team. Since, of course, they could never play each other, we can't know with certainty. But we can analyze the two teams statistically and interpret the results.

You can read the article at theheart.org, but you'll need to go through the free registration process to get to the full text. I'll summarize what I understand here:

Sackner-Bernstein's analysis involves normalizing the data from the individual game results of both teams with data from their opposing teams. I believe the purpose here is to compare Miami and New England relative to how all the teams in the league are doing. In other words, when we look at points scored, if all teams are scoring more points in 2007 than in 1972 (for whatever reason), the normalization factor will scale down the New England number of points scored to be relative to the average points scored in 1972.

Another factor in the analysis is to assess the strength of both Miami and New England relative to the teams they faced. For example, if Miami beat their opponents by a wide margin and their opponents were all relatively good teams, while New England beat their opponents by a thin margin and their opponents were relatively weak, we would conclude that Miami was the better team. To normalize for that factor, Sackner-Bernstein multiplies the statistics for each game by the season-end winning percentage of each opponent. For example, a 20-10 win over a team that had a winning percentage of .500 would count as 10-5, whereas a 27-21 win over a team that ended the season at .333 would count as 9-7.
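The weighting in that example can be written out as a small Python sketch. It reproduces only the per-game scaling described here; the full normalization in Sackner-Bernstein's article may differ in detail, and the function names are hypothetical. `Fraction` treats .333 as exactly 1/3, so the example comes out to exactly 9-7.

```python
from fractions import Fraction


def weighted_score(points_for, points_against, opp_win_pct):
    """Scale one game's score by the opponent's season-end winning percentage."""
    return points_for * opp_win_pct, points_against * opp_win_pct


def season_totals(games):
    """Sum weighted points for and against over (pf, pa, opp_win_pct) tuples."""
    total_for = total_against = 0
    for pf, pa, pct in games:
        wf, wa = weighted_score(pf, pa, pct)
        total_for += wf
        total_against += wa
    return total_for, total_against


# The two example games from the post.
games = [(20, 10, Fraction(1, 2)), (27, 21, Fraction(1, 3))]
for pf, pa, pct in games:
    wf, wa = weighted_score(pf, pa, pct)
    print(f"{pf}-{pa} vs a {float(pct):.3f} team counts as {wf}-{wa}")

tf, ta = season_totals(games)
print(f"weighted totals: {tf}-{ta}")
```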

The analysis also calculated stats on a game-by-game basis to determine whether either team tended to perform better or worse over the course of the season.

To do this, Sackner-Bernstein first gathered data on the outcomes of all 14 Miami games and all 16 New England games. Then he gathered data on all the other teams in the league. The four statistics that he gathered were:

1. points scored

2. points against

3. total yards offense

4. total yards defense

The points and yardage differentials were also considered.

You can see the tabulation of the results in the article. I'm interested in getting hold of the mean values for the league, and I've contacted Sackner-Bernstein's organization to try to obtain those data. They would make a great sample exercise. I'm also trying to understand the p-value concept. I'll have to wait for chapter 9 (pg 286) to get into that.

Sackner-Bernstein concludes:

Thus, this analysis concludes that the regular season performances of the 1972 Miami Dolphins and the 2007 New England Patriots are statistically indistinguishable in terms of dominance over their opponents in each of their respective seasons. Under the assumption that the Patriots reign victorious in this year's Super Bowl, one can only conclude that the two teams are equally dominant and should be considered the two teams tied for the best single-season performances in the NFL's modern era.

Posted by Eliezer at Thursday, January 31, 2008

Tags: Fun stuff

Wednesday, January 30, 2008

The Birthday Problem

A relatively well-known exercise in probability is to determine the probability that two (or more) people share a birthday in a group of n people.


The answer is pretty surprising to most people. It turns out that in a group of 23 people, there's more than a 50% chance that two (or more) people have the same birthday.

The way to solve this problem is to first look at the complementary problem, i.e., what is the probability that everyone has a different birthday? Let's look at the people one at a time (ignoring leap days, so there are 365 possible birthdays). The first person can have any birthday, so there are 365 possibilities for him. The second person can't share the first person's birthday, so there are 364 possibilities for the second person. Then there are 363 for the third person, 362 for the fourth, and so on.

Since the events (the selection of birthdays for each person) are independent, the number of outcomes in which everyone has a different birthday is:

365 x 364 x 363 x ... x (365-n+1) where n is the number of people in the group

The total number of possible birthday combinations is 365^n. So for n = 23, the probability that all the birthdays are different is:

P(23) = (365 x 364 x 363 x … x 343) / (365^23)

And the probability of two (or more) people having the same birthday is just the complement:

P'(23) = 1 - P(23)

The calculation of P(23) is pretty ugly, but can be computed relatively accurately by most scientific calculators and personal computers. P(23) = 0.493, so P'(23) is 50.7%.
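These days the "ugly" calculation takes a few lines of Python. The sketch below computes P(n) as a running product, so it never forms the huge numbers 365 x 364 x ... or 365^23 directly. It then searches for the smallest group size where a shared birthday is at least 50% likely. Like the text, it assumes 365 equally likely birthdays.

```python
def p_all_different(n, days=365):
    """Probability that n people all have different birthdays (days equally likely)."""
    p = 1.0
    for k in range(n):
        p *= (days - k) / days  # person k+1 must avoid the k birthdays already taken
    return p


def p_shared(n, days=365):
    """Probability that at least two of n people share a birthday."""
    return 1.0 - p_all_different(n, days)


def smallest_group(target=0.5, days=365):
    """Smallest n for which p_shared(n) >= target."""
    n = 1
    while p_shared(n, days) < target:
        n += 1
    return n


print(f"P(23)  = {p_all_different(23):.3f}")
print(f"P'(23) = {p_shared(23):.3f}")
print("smallest group with >= 50% chance:", smallest_group())
```

Running it prints P(23) = 0.493 and P'(23) = 0.507, and confirms that 23 is the smallest such group.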

Taking it a step further

This is the point where most people stop, but a couple of questions occurred to me:

1. Is there a way to simplify the formula, even with an approximation, that would make the computation quicker and easier?

2. At the same time, can we generalize the formula beyond 365 possible birthdays and calculate (or estimate) the probability of selecting n items, all of different types, from an infinite supply of items with x different types? Similarly, what is the probability that at least two of the n selected items have the same type?
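As one possible starting point for both questions, here's a Python sketch that generalizes the exact product to x equally likely types. It compares that product with a commonly used approximation, P(all different) ≈ exp(-n(n-1)/(2x)). The approximation comes from replacing each factor 1 - k/x with exp(-k/x), which is close when k/x is small, and summing the exponents k = 0, 1, ..., n-1. This sketch is not taken from any of the sources mentioned here.

```python
import math


def p_all_different_exact(n, x):
    """Exact probability that n draws from x equally likely types are all different."""
    p = 1.0
    for k in range(n):
        p *= (x - k) / x
    return p


def p_all_different_approx(n, x):
    """Approximation using 1 - k/x ~ exp(-k/x), summed over k = 0..n-1."""
    return math.exp(-n * (n - 1) / (2 * x))


for x, n in [(365, 23), (365, 50), (1000, 40)]:
    exact = 1 - p_all_different_exact(n, x)
    approx = 1 - p_all_different_approx(n, x)
    print(f"x={x:5d}, n={n:3d}: shared exact={exact:.4f}, approx={approx:.4f}")
```

For 23 people and 365 days, the approximation gives about 0.500 versus the exact 0.507. Because 1 - t is never larger than exp(-t), the approximation always slightly underestimates the chance of a match.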

It turns out that these questions have actually been studied quite a bit. In 1966, E. H. McKinney of Ball State University wrote a piece in The American Mathematical Monthly (v. 73, No. 4, pp. 385-387) in which he derived a formula for finding the smallest value of n such that the probability that at least r people share the same birthday is greater than or equal to 1/2.

McKinney did the computation for r = 2, 3 and 4 and reports the answers as 23, 88 and 187 respectively. What I really found interesting was the following note:

The author did not carry out the computation for r=5 since the machine time [on an IBM 7090 computer] for one computation of [the formula] for a given n was estimated at two hours.

I located the IBM history site on the 7090 Data Processing System. It's really fascinating and worth a read. But what really caught my eye was the bottom line:

The IBM 7090, which is manufactured at IBM's Poughkeepsie, N. Y. plant, sells for $2,898,000 and rents for $63,500 a month in a typical configuration.

Wow! For almost $3 million, you got a computer that would take 2 hours to calculate what you could probably do in a fraction of a second today. I say "probably" because I don't totally understand McKinney's formula yet and I haven't implemented it. I'm still working on that part. :)
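McKinney's exact formula isn't reproduced here, but a brute-force simulation gives a quick sanity check on his numbers. The Python sketch below is a Monte Carlo estimate, which is a different technique from McKinney's derivation. It simulates many random groups of n people and counts how often at least r of them share a birthday. The trial count and seed are arbitrary choices.

```python
import random
from collections import Counter


def p_at_least_r_share(n, r, trials=5_000, days=365, seed=1):
    """Monte Carlo estimate of P(at least r of n people share a birthday)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        counts = Counter(rng.randrange(days) for _ in range(n))
        if max(counts.values()) >= r:
            hits += 1
    return hits / trials


# McKinney's reported thresholds were n = 23, 88 and 187 for r = 2, 3 and 4,
# so each estimate below should land near 0.5 (give or take simulation noise).
for r, n in [(2, 23), (3, 88), (4, 187)]:
    print(f"r={r}, n={n}: estimated probability {p_at_least_r_share(n, r):.3f}")
```

With 5,000 trials, each estimate has a standard error of roughly 0.007. That is enough to confirm that the thresholds sit near 50%, but not enough to pin down the exact cutoff. The exact formula is what does that.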

More on approximations of the birthday probability later...

Posted by Eliezer at Wednesday, January 30, 2008

Tags: Fun stuff

Sunday, January 27, 2008

Learning, teaching and the Moore method


Tonight I listened to a recording of a lecture given by the late Irving Kaplansky, who was a mathematics professor at the University of Chicago from 1945 to 1984. The lecture, entitled "Fun with Mathematics: Some Thoughts from Seven Decades", is a fascinating combination of personal anecdotes and memoir, advice on how to do research, and advice on how to be successful in general. The lecture is available from the Mathematical Sciences Research Institute site.

The four main pieces of advice that he outlines are:

I. Search the literature (he spends a lot of time on this topic and much of what he says has probably changed in the past few years with the introduction of Google Scholar)

II. Keep your notes (keep a bound notebook and date your entries)

III. Reach out (talk to people)

IV. Try to learn something new every day


During the lecture, Kaplansky mentions R. L. Moore and his teaching style. Moore discouraged literature search by his students. He wanted them to be able to come up with everything on their own, even if the work had already been done before. I did a quick Google search for Moore and found the main site at the University of Texas. The methodology is called Inquiry-Based Learning (IBL) or, sometimes, Discovery Learning. The concept is that the instructor guides the students to develop the concepts, solve the problems and create the proofs themselves.

Fascinating! I really like this innovative teaching concept and the way it ties the teacher and student together more closely than the typical lecture does. There's lots of material on this method on the UTexas site that I'm going to delve into. I think part of the reason I'm writing this blog is that I feel that if I can't say something back in my own words, I didn't really learn and understand it in the first place. Or, as Richard Feynman is said to have said, "What I cannot create, I do not understand."

A good background article on Moore and his method was published in 1977 in The American Mathematical Monthly (vol. 84, No. 4, pp. 273-278) by F. Burton Jones. It's available on JSTOR via the DePaul online library.

My initial take on the Moore Method is that it works well in courses whose main focus is developing a system of theorems from a set of axioms, like geometry or topology. For branches of applied mathematics such as statistics, implementing the method will not be as straightforward. However, I've briefly seen an article (I think it was a PhD thesis) on applying the Moore Method to teaching calculus. If it can be used to teach calc, it can be used for stats!

Lucy Kaplansky

And the reason I was listening to the Kaplansky lecture (in fact, the only reason I had ever heard of Kaplansky) is that he is the father of one of my favorite singer-songwriters, Lucy Kaplansky. If you want to listen to some of her songs on YouTube, check out the playlist I put together. In addition to being a top-tier mathematician, Irving Kaplansky was also a musician, and he wrote a song about pi entitled A Song about Pi. I heard Lucy perform that song at a radio event that was recorded in Chicago this summer. It's very cool. I'm anxiously awaiting the release of the CD from that performance.

Posted by Eliezer at Sunday, January 27, 2008

Tags: Fun stuff

Midterm study diversion (I)

If you need a break from studying for the midterm, think about this: Who Discovered Bayes's Theorem?

You think it's obvious, right? Bayes, of course. Well, it's not so obvious. In fact, Stephen M. Stigler, professor of statistics across town at the University of Chicago, wrote an article with just that title back in 1983 in the respected journal The American Statistician (vol. 37, no. 4, pp. 290-296). We have access to that journal through the DePaul online library. I'm in the middle of reading it, and I must say it's a very entertaining piece, sort of a whodunit for the mathematically inclined.

If you haven't taken advantage of the DePaul online library system yet, I highly recommend that you learn how to use it. It's a great resource and, after all, you're paying for it.

Here's how you can get to this article:

1. Access the online library at: http://www.lib.depaul.edu/

2. Click the link for Journal & Newspaper Articles in the Research section of the page.

3. Enter "american statistician" in the search box on the left and click Search.

4. The results screen shows the 5 locations where we have this journal (1 in print and 4 online) and the years covered by each location.

5. Since we're looking for an article from 1983, the only location that has that year is the first online location: JSTOR Arts and Sciences II Collection. Click that link.

6. Now you'll need your DePaul Campus Connect ID to log in and access the article.

7. You're now in JSTOR for The American Statistician. Click the link to Search This Journal and then run an article title search on "Who Discovered Bayes's Theorem". The rest is self-explanatory. Feel free to email me or post a comment if you have trouble getting to this article.

BTW, I skimmed the TOC of that issue and saw an article that looked interesting: On the Effect of Class Size on the Evaluation of Lecturers' Performance, by Prof. Ayala Cohen of the Technion. I scanned it, and it looks worth a read.

Posted by Eliezer at Sunday, January 27, 2008

Tags: Fun stuff

Tuesday, January 15, 2008

Post lecture 2 research and notes

The talk about conditional probability reminded me of one of my favorite mathematical paradoxes - the so-called Monty Hall Problem. Warning: This may confuse you! Don't read any further unless you really like puzzles and paradoxes.

The Monty Hall Problem

There was once a TV game show called Let's Make a Deal, hosted by Monty Hall. He played different games with the studio audience, similar to the way Drew Carey does on The Price Is Right.

One of the games goes like this:

1. Monty presents to you three doors, labeled 1, 2 and 3. He tells you that there's a car behind one door (it's a big door) and goats behind the other two. You get to pick one door.

2. After you make your choice, Monty opens one of the other doors to reveal a goat. He then gives you the option to change your original choice to another door.

The question is: Would you switch?

I'll give you some time to ponder...

Time's up!

Intuitively, you would think that there's no real advantage to switching. At step 1, you had a 1 in 3 chance of picking the car. At step 2, you now have a 1 in 2 chance of picking the car. Your original choice is still no better than the other door. Both have a 50/50 chance.

I'm going to stop here for two reasons:

1. I have a meeting to go to.

2. I'm still not sure why the intuitive solution is incorrect.

In the meantime, I'll refer you to two web pages:

Wikipedia - Monty Hall Problem

Play the Monty Hall Problem online

Excerpt from The American Statistician

I hope to come back to this soon with some clarity.

...

Ok, I'm back with a fairly simple explanation (although not rigorous) that I think makes sense...

When you make your initial door selection, you may have chosen either the car or a goat:

Case 1: 1/3 chance of having chosen the car

Case 2: 2/3 chance of a goat.

In case 1, after Monty reveals a goat in another door, switching is bad since you were correct with your initial guess.

In case 2, after Monty reveals a goat, switching is good: your first guess was a goat and Monty's door was the other goat, so switching gets you to the car.

So there's a 1/3 chance that switching is bad and a 2/3 chance that switching is good. Therefore, you should switch!
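If the argument still feels slippery, a simulation can settle it empirically. This Python sketch plays the game many times under the standard rules. The car is placed at random, and Monty always opens a goat door you didn't pick, choosing at random when he has two options. The sketch then tallies how often staying and switching win. The trial count and seed are arbitrary.

```python
import random


def play(switch, rng):
    """Play one round of the three-door game; return True if the player wins the car."""
    doors = [1, 2, 3]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # Monty opens a door that is neither the player's pick nor the car.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Switch to the one remaining closed door.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch, trials=100_000, seed=42):
    rng = random.Random(seed)
    wins = sum(play(switch, rng) for _ in range(trials))
    return wins / trials


print(f"stay:   {win_rate(False):.3f}")
print(f"switch: {win_rate(True):.3f}")
```

Staying should come out near 1/3 and switching near 2/3, matching the case analysis.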

Make sense?

I'd really like to work on explaining this in terms of the conditional probabilities that we learned in class.
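One way to phrase this with conditional probability is Bayes' theorem. Suppose you pick door 1 and Monty opens door 3. Assume the car is equally likely to be behind any door and that Monty picks at random when both other doors hide goats. Then P(Monty opens 3 | car behind 1) = 1/2, P(Monty opens 3 | car behind 2) = 1, and P(Monty opens 3 | car behind 3) = 0. The Python sketch below applies Bayes' theorem to these values with exact fractions.

```python
from fractions import Fraction


def posterior_after_open(pick=1, opened=3):
    """P(car behind each door | player picked `pick` and Monty opened `opened`).

    ASSUMES a uniform prior over doors 1-3 and that Monty never opens the
    player's door or the car's door, choosing at random when both remain.
    """
    doors = [1, 2, 3]
    prior = {d: Fraction(1, 3) for d in doors}

    def likelihood(car):
        # Probability that Monty opens `opened`, given where the car is.
        options = [d for d in doors if d != pick and d != car]
        return Fraction(1, len(options)) if opened in options else Fraction(0)

    joint = {d: prior[d] * likelihood(d) for d in doors}
    evidence = sum(joint.values())  # P(Monty opens `opened`)
    return {d: joint[d] / evidence for d in doors}


print(posterior_after_open())
```

The posterior gives 1/3 for staying with door 1 and 2/3 for switching to door 2. The asymmetry comes entirely from the likelihoods: Monty was forced to open door 3 if the car is behind door 2, but only had a 50/50 choice if it is behind door 1.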

Posted by Eliezer at Tuesday, January 15, 2008

Tags: Fun stuff