Confidence Interval for Ŷ

Once we've calculated our regression coefficients, $b_0$ and $b_1$, we can estimate the value of Y at any given X with the formula:

$$\hat{Y} = b_0 + b_1 X$$

This is known as a point estimate: it gives a single value for Ŷ rather than a range. However, since it's just an estimate, it's natural to ask for a confidence interval around Ŷ.
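As a minimal sketch of how the point estimate is produced, the least-squares coefficients can be computed directly as $b_1 = SSXY/SSX$ and $b_0 = \bar{Y} - b_1\bar{X}$. The function names `fit_line` and `point_estimate` are hypothetical, and the toy data in the tests are made up for illustration; they are not the site.mtw values.

```python
def fit_line(xs, ys):
    """Least-squares coefficients (b0, b1) for Y-hat = b0 + b1*X."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # SSX: sum of squared deviations of X; SSXY: sum of cross-deviations
    ssx = sum((x - x_bar) ** 2 for x in xs)
    ssxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b1 = ssxy / ssx
    b0 = y_bar - b1 * x_bar
    return b0, b1


def point_estimate(b0, b1, x):
    """Y-hat = b0 + b1*x: a single predicted value with no interval."""
    return b0 + b1 * x
```

The point estimate alone says nothing about how uncertain it is, which is what the intervals below add.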

In addition to constructing a confidence interval for an individual Ŷ, we can also construct a confidence interval for an average Ŷ at X.

The difference is best illustrated with an example. In site.mtw we have data on square footage of stores and their annual sales. In general, sales increase linearly with increasing square footage. We perform a regression analysis and determine the regression coefficients. Now we could ask two questions:

1. If I build a single new store with 4,000 square feet, what does the regression predict for its annual sales? The answer can be expressed as a confidence interval for an individual Ŷ (Minitab calls this a prediction interval), because we're making a prediction for one new store.

2. If I build many new stores, each with 4,000 square feet, what does the regression predict for the average annual sales of those stores? The answer to this question can be expressed as a confidence interval for an average Ŷ, since we're estimating the mean sales of stores of that size rather than the sales of any one store.

Confidence Interval for Average Ŷ

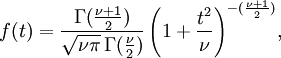

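The confidence interval for the average Ŷ (question #2 above) takes the common form of estimate ± (critical value × standard error):

$$\hat{Y}_i \pm t_{\alpha/2,\,n-2}\, S_{YX}\sqrt{h_i}$$

where $S_{YX} = \sqrt{SSE/(n-2)}$ is the standard error of the estimate, $t_{\alpha/2,\,n-2}$ is the critical value of the t distribution with $n-2$ degrees of freedom, and

$$h_i = \frac{1}{n} + \frac{(X_i - \bar{X})^2}{SSX}, \qquad SSX = \sum_{j=1}^{n}(X_j - \bar{X})^2$$

Here $X_i$ is the value of X at which we are predicting.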
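A minimal sketch of the interval for the average Ŷ, assuming simple linear regression with one predictor. The names `leverage` and `mean_response_ci` are hypothetical. The critical value `t_crit` is passed in rather than computed, mirroring the by-hand approach of looking t up in a table, so the sketch needs only the standard library.

```python
import math


def leverage(xs, x_new):
    """h_i = 1/n + (X_i - X_bar)^2 / SSX for a prediction at x_new."""
    n = len(xs)
    x_bar = sum(xs) / n
    ssx = sum((x - x_bar) ** 2 for x in xs)
    return 1 / n + (x_new - x_bar) ** 2 / ssx


def mean_response_ci(xs, ys, x_new, t_crit):
    """Confidence interval for the average Y at x_new.

    t_crit is the two-sided critical value t_{alpha/2, n-2} from a t table.
    """
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    ssx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / ssx
    b0 = y_bar - b1 * x_bar
    # S_YX: standard error of the estimate, based on SSE with n - 2 df
    sse = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))
    s_yx = math.sqrt(sse / (n - 2))
    y_hat = b0 + b1 * x_new
    half_width = t_crit * s_yx * math.sqrt(leverage(xs, x_new))
    return y_hat - half_width, y_hat + half_width
```

Note that $h_i$ is smallest (exactly $1/n$) when $X_i = \bar{X}$, so the interval is narrowest at the center of the data and widens as we predict farther out.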

Confidence Interval for Individual Ŷ

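If we're constructing the confidence interval for an individual Ŷ (question #1 above), the calculations are very similar except that we use a $1 + h_i$ term in place of $h_i$. So the interval becomes:

$$\hat{Y}_i \pm t_{\alpha/2,\,n-2}\, S_{YX}\sqrt{1 + h_i}$$

The extra 1 accounts for the scatter of an individual Y around the regression line, so this interval is always wider than the one for the average Ŷ.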
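To see the $h_i$ versus $1 + h_i$ difference side by side, here is a self-contained sketch that returns both intervals at the same X. The function name is hypothetical, and `t_crit` is again assumed to be looked up by the caller (4.303 in the test is the tabulated $t_{0.025}$ for 2 degrees of freedom, matching the four made-up data points).

```python
import math


def mean_and_individual_intervals(xs, ys, x_new, t_crit):
    """Return (y_hat, ci, pi) for a simple linear regression at x_new.

    ci: interval for the average Y, uses sqrt(h_i).
    pi: interval for an individual Y, uses sqrt(1 + h_i).
    t_crit is t_{alpha/2, n-2}, looked up from a t table by the caller.
    """
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    ssx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / ssx
    b0 = y_bar - b1 * x_bar
    sse = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))
    s_yx = math.sqrt(sse / (n - 2))  # standard error of the estimate
    h = 1 / n + (x_new - x_bar) ** 2 / ssx
    y_hat = b0 + b1 * x_new
    ci_half = t_crit * s_yx * math.sqrt(h)      # average Y
    pi_half = t_crit * s_yx * math.sqrt(1 + h)  # individual Y
    ci = (y_hat - ci_half, y_hat + ci_half)
    pi = (y_hat - pi_half, y_hat + pi_half)
    return y_hat, ci, pi
```

Because both intervals share the same center and the same $t$ and $S_{YX}$, the only difference in width comes from $\sqrt{h_i}$ versus $\sqrt{1 + h_i}$.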

Using Minitab to calculate the confidence interval

Here's how to get the info we need out of Minitab:

1. Load up your data in a worksheet. (We use the site.mtw file as usual.)

2. Select Stat > Regression > Regression from the menu bar.

3. Put the independent variable (square feet) in the Predictor box. Put the dependent variable (annual sales) in the Response box.

4. Click the Options button. Enter 4 in the Prediction interval for new observations box. This tells Minitab that we want a prediction of annual sales at 4,000 square feet (square footage in our data is measured in thousands of square feet).

5. Check the Confidence Limit checkbox if you want a confidence interval for an average Y. Check the Prediction Limit checkbox if you want a confidence interval for an individual Y.

6. Click OK in the Options and the main Regression windows. The results appear in the session window. Here's the relevant information:

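Predicted Values for New Observations

| New Obs | Fit | SE Fit | 95% CI | 95% PI |
|---|---|---|---|---|
| 1 | 7.644 | 0.309 | (6.971, 8.317) | (5.433, 9.854) |

The prediction is 7.644 (the "fit"). The SE Fit term is the standard error for the average Y, $S_{YX}\sqrt{h_i}$ (the one with just $h_i$, not $1 + h_i$). The 95% CI column is the confidence interval for an average Y; the 95% PI column is the interval for an individual Y, which is wider.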

I suspect we'll be expected to construct the confidence interval for the average Y by hand, given the Fit and SE Fit output. Don't forget: you still need to look up the t value (with n − 2 degrees of freedom!), multiply SE Fit by it, and then add that product to and subtract it from the Fit.
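As a sketch of that hand calculation, the snippet below rebuilds the 95% CI from the Fit and SE Fit values in the Minitab output. The t value is an assumption: 2.1788 is the tabulated $t_{0.025}$ for 12 degrees of freedom, which presumes n = 14 stores in site.mtw. That choice is consistent with the output, since the CI half-width divided by SE Fit is 0.673 / 0.309 ≈ 2.18. The helper name `ci_from_fit` is hypothetical.

```python
def ci_from_fit(fit, se_fit, t_crit):
    """Confidence interval for the average Y: Fit ± t * SE Fit."""
    half_width = t_crit * se_fit
    return fit - half_width, fit + half_width


# Values copied from the Minitab session output.
FIT = 7.644
SE_FIT = 0.309
# Assumption: n = 14, so df = n - 2 = 12 and t_{0.025, 12} is about 2.1788.
T_CRIT = 2.1788

low, high = ci_from_fit(FIT, SE_FIT, T_CRIT)
print(f"95% CI: ({low:.3f}, {high:.3f})")  # prints "95% CI: (6.971, 8.317)"
```

The same approach works for the individual Y if SE Fit is replaced by $\sqrt{S_{YX}^2 + \text{SE Fit}^2}$, because $S_{YX}\sqrt{1 + h_i} = \sqrt{S_{YX}^2 + S_{YX}^2 h_i}$; $S_{YX}$ is the "S =" value Minitab prints with the regression results.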