This just in.... Midterm has craaaaaaaazy insurance claim problem!

I'll tell you what I got. I can't promise that it's right, but if you think I got it wrong, post a comment or shoot me an email. I'm doing this from memory, so if I'm not remembering the question correctly in some substantial way, let me know for sure.

Q. An insurance company determines that it receives an average of nine claims in an average week. Claims in any given week do not affect the chances of claims in other weeks.

For a given week:

A. What is the probability of receiving 7 claims?

B. Given that there was at least one claim, what is the probability that there were 7 claims?

Answer: Ok, this is clearly an application of the Poisson distribution.

For part A, just plug the numbers into the formula and you get

f(x=7) = (e^-9)(9^7)/7! = (0.0001234)(4782969)/5040 = 0.1171 = 11.71%

BTW, I found a handy-dandy Poisson distribution probability calculator online at Stattrek. I plugged in our numbers there and it calculated the same answer. Ok, that was the easy part. Now on to part B...

Part B tells us that there was at least one claim. We can calculate P(x>=1) = 1 - P(x=0). And P(x=0) = (e^-9)(9^0)/0! = e^-9 = 0.0001234. This makes sense intuitively because the probability of there being no claims at all when the average is 9 is pretty slim. Really slim, in fact.

So, P(x>=1) = 1-0.0001234 = 0.9998766. And we're being asked to determine P(x=7 | x>=1).

By definition of conditional probability:

P(x=7 | x>=1) = P( x=7 and x>=1) / P(x>=1)

We know the denominator. We calculated it to be 0.9998766.

To calculate the numerator (and though we really don't need to calculate anything, we'll try to be rigorous), note that the event x=7 is contained in the event x>=1: if there were exactly 7 claims, there was certainly at least one. So the event "x=7 and x>=1" is just the event x=7, and therefore P(x=7 and x>=1) = P(x=7). That's our numerator!

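So, P(x=7 | x>=1) = P(x=7) / P(x>=1) = 0.1171 / 0.9998766 ≈ 0.11711, or about 11.71%. (Carrying more digits, P(x=7) = 0.117116 and the ratio is 0.117130, which still rounds to 11.71%.)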

This is only slightly more than the probability in part A, but that makes sense since the condition in part B that there was at least one claim didn't significantly decrease the sample space.
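For anyone who'd rather check these numbers in code than on a calculator or at Stattrek, here's a minimal Python sketch of both parts using only the standard library. The helper name `poisson_pmf` is my own, not something from the course materials.

```python
import math

def poisson_pmf(x: int, lam: float) -> float:
    """P(X = x) for a Poisson random variable with mean lam."""
    return math.exp(-lam) * lam**x / math.factorial(x)

lam = 9  # average number of claims per week (from the question)

# Part A: P(X = 7)
p7 = poisson_pmf(7, lam)

# Part B: P(X = 7 | X >= 1). The event {X = 7} lies inside {X >= 1},
# so the numerator of the conditional probability is just P(X = 7).
p_at_least_one = 1 - poisson_pmf(0, lam)
p7_given_claim = p7 / p_at_least_one

print(f"P(X = 7)          = {p7:.4f}")
print(f"P(X >= 1)         = {p_at_least_one:.7f}")
print(f"P(X = 7 | X >= 1) = {p7_given_claim:.4f}")
```

Both answers round to 11.71%, matching the hand calculation.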

That was the only really tricky question on the midterm, imho, although there were plenty of places that one could make a careless mistake. I noticed that most of the incorrect multiple choice answers were offered up to entice you if you made a quick mistake and didn't check your work.

Slightly tricky: Two mutually exclusive events (each with a nonzero probability) are not independent. This is evident when you look at the test for independence:

P(A and B) = P(A)P(B)

Well, if the events are mutually exclusive, then P(A and B) = 0. But if both P(A) and P(B) are nonzero, then P(A)P(B) is nonzero too, so the test fails and the events can't be independent.

Answer to the bonus question: Snow and cold weather are independent since P(snow and cold) = P(snow)P(cold)

Thursday, January 31, 2008

The Midterm

Posted by Eliezer at Thursday, January 31, 2008

Tags: Midterm

The best team in NFL history

Both the 1972 Miami Dolphins and the 2007 New England Patriots were undefeated in the regular season. Dr. Jonathan Sackner-Bernstein, an admitted Dolphins fan, has looked at the question of which was the better team. Since, of course, they could never play each other, we can't know with certainty. But we can analyze the two teams statistically and interpret the results.

You can read the article at theheart.org, but you'll need to go through the free registration process to get to the full text. I'll summarize what I understand here:

Sackner-Bernstein's analysis involves normalizing the data from the individual game results of both teams with data from their opposing teams. I believe the purpose here is to compare Miami and New England relative to how all the teams in the league are doing. In other words, when we look at points scored, if all teams are scoring more points in 2007 than in 1972 (for whatever reason), the normalization factor will scale down the New England number of points scored to be relative to the average points scored in 1972.

Another factor in the analysis is to assess the strength of both Miami and New England relative to the teams they faced. For example, if Miami beat their opponents by a wide margin and their opponents were all relatively good teams while New England beat their opponents by a thin margin and their opponents were relatively weak, we would conclude that Miami was the better team. In order to normalize for that factor, Sackner-Bernstein multiplies the statistics for each game by the season-end winning percentage of each opponent. For example, a 20-10 win over a team that had a winning percentage of .500 would count as 10-5, whereas a 27-21 win over a team that ended the season at .333 would count as 9-7.
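Here's how I read the opponent-strength weighting, as a tiny Python sketch. This is my own reconstruction from the summary above, not Sackner-Bernstein's actual method or code; `weight_game` is a hypothetical helper, and I use exact fractions so that a .333 opponent is treated as exactly one third.

```python
from fractions import Fraction

def weight_game(points_for: int, points_against: int, opp_win_pct: Fraction) -> tuple:
    """Scale one game's score by the opponent's season-end winning percentage.

    Hypothetical helper based on my reading of the article summary.
    """
    return points_for * opp_win_pct, points_against * opp_win_pct

# The two examples from the text
print(weight_game(20, 10, Fraction(1, 2)))  # opponent finished at .500
print(weight_game(27, 21, Fraction(1, 3)))  # opponent finished at .333
```

The weighted scores come out to 10-5 and 9-7, as in the examples.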

The analysis also calculated stats on a game-by-game basis to determine whether either team trended toward better or worse performance over the course of the season.

To do this, Sackner-Bernstein first gathered data on the outcomes of all 14 Miami games and all 16 New England games. Then he gathered data on all the other teams in the league. The four statistics that he gathered were:

1. points scored

2. points against

3. total yards offense

4. total yards defense

The points and yardage differentials were also considered.

You can see the tabulation of the results in the article. I'm interested in getting hold of the mean values for the league, and I've contacted Sackner-Bernstein's organization to try to obtain those data. They would make a great sample exercise. I'm also trying to understand the p-value concept. I'll have to wait for chapter 9 (pg 286) to get into that.

Sackner-Bernstein concludes:

Thus, this analysis concludes that the regular season performances of the 1972 Miami Dolphins and the 2007 New England Patriots are statistically indistinguishable in terms of dominance over their opponents in each of their respective seasons. Under the assumption that the Patriots reign victorious in this year's Super Bowl, one can only conclude that the two teams are equally dominant and should be considered the two teams tied for the best single-season performances in the NFL's modern era.

Posted by Eliezer at Thursday, January 31, 2008

Tags: Fun stuff

Wednesday, January 30, 2008

Midterm tip

In addition to bringing along a hard copy of the formula sheet, be sure to bring a calculator to the midterm. You'll probably want one with an x^y (power) key so you can use it for the Binomial or Poisson distribution calculations.

Posted by Eliezer at Wednesday, January 30, 2008

Tags: Midterm

The Birthday Problem

A relatively well-known exercise in probability is to determine the probability that two (or more) people share a birthday in a group of n people.

The answer is pretty surprising to most people. It turns out that in a group of 23 people, there's more than a 50% chance that two (or more) people have the same birthday.

The way to solve this problem is to first look at the complementary problem, i.e. what is the probability of everyone having different birthdays? (As usual, we ignore February 29 and assume every birthday is equally likely.) Well, let's look at the people individually. The first person can have any birthday, so there are 365 possibilities for him. The second person can't have the same birthday as the first, so there are 364 possibilities for the second person. Then there are 363 for the third person, 362 for the fourth, and so on.

Multiplying these counts together, the number of outcomes in which all n people have different birthdays is:

365 x 364 x 363 x ... x (365-n+1) where n is the number of people in the group

The total number of possible birthday combinations is 365^n.

P(23) = (365 x 364 x 363 x … x 343) / (365^23)

And the probability of two (or more) people having the same birthday is just the complement:

P’(23) = 1 - P(23)

The calculation of P(23) is pretty ugly, but can be computed relatively accurately by most scientific calculators and personal computers. P(23) = 0.493, so P'(23) is 50.7%.
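Here's a minimal Python sketch of the calculation above, under the same assumption of 365 equally likely birthdays (no leap days). Rather than computing 365 x 364 x ... x 343 and 365^23 separately, it multiplies the ratios (365-k)/365 one person at a time, which keeps the numbers small. The function names are just illustrative.

```python
def prob_all_different(n: int, days: int = 365) -> float:
    """P(n): probability that n people all have different birthdays."""
    p = 1.0
    for k in range(n):
        # person k+1 must avoid the k birthdays already taken
        p *= (days - k) / days
    return p

def prob_shared(n: int, days: int = 365) -> float:
    """P'(n): probability that at least two of the n people share a birthday."""
    return 1 - prob_all_different(n, days)

print(round(prob_all_different(23), 3), round(prob_shared(23), 3))

# Smallest group size for which a shared birthday is more likely than not
n = 1
while prob_shared(n) <= 0.5:
    n += 1
print(n)
```

The search loop confirms that 23 is the smallest group for which a shared birthday is more likely than not.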

Taking it a step further

This is the point where most people stop, but a couple of questions naturally follow:

1. Is there a way to simplify the formula, even with an approximation, that would make the computation quicker and easier?

2. At the same time, can we generalize the formula beyond the 365 possible birthdays and develop a way to calculate (or estimate) the probability of selecting n items, all of different types, from an infinite supply of items with x different types? Similarly, what is the probability that at least two items share a type within a selection of n items?
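On these two questions, here's a hedged Python sketch. The exact version just swaps 365 for x types. For a quick approximation, one standard trick (not McKinney's formula, and not necessarily what I'll end up using later) is to replace each factor (1 - k/x) with e^(-k/x), which is close when k/x is small; the exponents add up to n(n-1)/(2x), giving P(all different) ≈ e^(-n(n-1)/(2x)). The function names are hypothetical, and `math.perm` needs Python 3.8 or later.

```python
import math

def exact_all_different(n: int, types: int) -> float:
    """Exact P(n draws all have different types), with `types` equally likely types."""
    if n > types:
        return 0.0  # pigeonhole: some type must repeat
    # math.perm(types, n) = types * (types-1) * ... * (types-n+1)
    return math.perm(types, n) / types**n

def approx_all_different(n: int, types: int) -> float:
    """Approximation using 1 - t ≈ exp(-t) for each factor (1 - k/types)."""
    return math.exp(-n * (n - 1) / (2 * types))

for n in (10, 23, 40):
    exact = exact_all_different(n, 365)
    approx = approx_all_different(n, 365)
    print(f"n={n:2d}  exact={exact:.4f}  approx={approx:.4f}")
```

For these group sizes the approximation stays within about a percentage point of the exact value, and at n = 23 it gives almost exactly 1/2.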

It turns out that these questions have actually been studied quite a bit. In 1966, E. H. McKinney of Ball State University wrote a piece in American Mathematical Monthly (v. 73, No. 4, pp. 385-387) in which he derived a formula for finding the smallest value of n such that the probability is greater than or equal to 1/2 that at least r people have the same birthday.

McKinney did the computation for r = 2, 3 and 4 and reports the answers as 23, 88 and 187 respectively. What I really found interesting was the following note:

The author did not carry out the computation for r=5 since the machine time [on an IBM 7090 computer] for one computation of [the formula] for a given n was estimated at two hours.

I located the IBM history site on the 7090 Data Processing System. It's really fascinating and worth a read. But what really caught my eye was the bottom line:

The IBM 7090, which is manufactured at IBM's Poughkeepsie, N. Y. plant, sells for $2,898,000 and rents for $63,500 a month in a typical configuration.

Wow! For almost $3 million, you got a computer that would take 2 hours to calculate what you could probably do in a fraction of a second today. I say "probably" because I don't totally understand McKinney's formula yet and I haven't implemented it. I'm still working on that part. :)

More on approximations of the birthday probability later...

Posted by Eliezer at Wednesday, January 30, 2008

Tags: Fun stuff

Tuesday, January 29, 2008

Binomial or Poisson?

I found the following handy tip on Jonathan Deane's site (University of Surrey):

- If a mean or average probability of an event happening per unit time/per page/per mile cycled etc., is given, and you are asked to calculate a probability of n events happening in a given time/number of pages/number of miles cycled, then the Poisson Distribution is used.

- If, on the other hand, an exact probability of an event happening is given, or implied, in the question, and you are asked to calculate the probability of this event happening k times out of n, then the Binomial Distribution must be used.

Posted by Eliezer at Tuesday, January 29, 2008

Tags: Midterm

Sunday, January 27, 2008

Learning, teaching and the Moore method

Tonight I listened to a recording of a lecture given by the late Irving Kaplansky, who was a mathematics professor at the University of Chicago from 1945-1984. The lecture, entitled "Fun with Mathematics: Some Thoughts from Seven Decades", is a fascinating combination of personal anecdotes/memoir/autobiography and advice on how to do research and advice on how to be successful in general. The lecture is available from the Mathematical Sciences Research Institute site.

The four main pieces of advice that he outlines are:

I. Search the literature (he spends a lot of time on this topic and much of what he says has probably changed in the past few years with the introduction of Google Scholar)

II. Keep your notes (write in a bound notebook and date your stuff)

III. Reach out (talk to people)

IV. Try to learn something new every day

During the lecture, Kaplansky mentions R. L. Moore and his teaching style. Moore discouraged literature search by his students. He wanted them to be able to come up with everything on their own, even if the work had already been done before. I did a quick Google search for Moore and found the main site at the University of Texas. The methodology is called Inquiry-Based Learning (IBL) or, sometimes, Discovery Learning. The concept is that the instructor guides the students to develop the concepts, solve the problems and create the proofs themselves.

Fascinating! I really like this innovative teaching concept and the way it ties the teacher and student together more closely than the typical lecture does. There's lots of material on this method on the UTexas site that I'm going to delve into. I think part of the reason I'm writing this blog is that I feel like if I can't say something back in my own words, I didn't really learn and understand it in the first place. Or, as Richard Feynman is said to have said, "What I cannot create, I do not understand."

A good background article on Moore and his method was published in 1977 in The American Mathematical Monthly (vol. 84, No 4, pp. 273-278) by F. Burton Jones. It's available on JSTOR via the Depaul online library.

My initial take on the Moore Method is that it works well in courses whose main focus is developing a system of theorems from a set of axioms, like geometry or topology. However, for branches of applied mathematics such as statistics, implementing the method will not be as straightforward. That said, I've briefly seen an article (I think it was a PhD thesis) on applying the Moore Method to teaching calculus. If it can be used to teach calc, it can be used for stats!

Lucy Kaplansky

And the reason I was listening to the Kaplansky lecture - in fact, the only reason I had ever heard of Kaplansky - is because he is the father of one of my favorite singer/songwriters, Lucy Kaplansky. If you want to listen to some of her songs on YouTube, check out the playlist I put together. In addition to being a top-tier mathematician, Irving Kaplansky was also a musician and wrote a song about pi entitled A Song about Pi. I heard Lucy perform that song on a radio event that was recorded in Chicago this summer. It's very cool. I'm anxiously awaiting the release of the CD from that performance.

Posted by Eliezer at Sunday, January 27, 2008

Tags: Fun stuff

Sample midterm questions - Part 2

"Regular" questions

Questions 1 and 2 - skip

Question 3: The average number of calls received by a switchboard in a 30-minute period is 15.

a. What is the probability that between 10:00 and 10:30 the switchboard will receive exactly 10 calls?

b. What is the probability that between 13:00 and 13:15 the switchboard will receive at least two calls?

Answer: Since this question deals with an "area of opportunity" (the 30-minute period), we obviously need to use Poisson.

For part a, we have a period (10-10:30) that is the standard 30-minute period. So,

f(x=10) = (e^-15)(15^10)/10!

Do the arithmetic or use a lookup table and you get:

f(x=10) = 0.048611

For part b, we have a 15-minute period, so the mean is 7.5 instead of 15. Also, to compute the right-hand tail f(x>=2), we take 1-f(x=0)-f(x=1).

f(x>=2) = 1 - f(x=0) - f(x=1)

= 1 - 0.0006 - 0.0041

f(x>=2)= 0.9953
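Here's a minimal Python sketch of both parts (standard library only; `poisson_pmf` is my own helper name). The key step in part b is scaling lambda to the length of the interval.

```python
import math

def poisson_pmf(x: int, lam: float) -> float:
    """P(X = x) for a Poisson random variable with mean lam."""
    return math.exp(-lam) * lam**x / math.factorial(x)

calls_per_30_min = 15

# (a) 10:00-10:30 is one full 30-minute period, so lambda = 15
p_a = poisson_pmf(10, calls_per_30_min)

# (b) 13:00-13:15 is half a period, so lambda scales to 7.5
lam_b = calls_per_30_min * (15 / 30)
# P(X >= 2) via the complement: 1 - P(X = 0) - P(X = 1)
p_b = 1 - poisson_pmf(0, lam_b) - poisson_pmf(1, lam_b)

print(f"(a) {p_a:.6f}")
print(f"(b) {p_b:.4f}")
```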

Question 4: Three workers at a fast food restaurant pack the take-out chicken dinners. John packs 45% of the dinners but fails to include a salt packet 4% of the time. Mary packs 25% of the dinners but omits the salt 2% of the time. Sue packs 30% of the dinners but fails to include the salt 3% of the time. You have purchased a dinner and there is no salt.

a. Find the probability that John packed your dinner.

b. Find the probability that Mary packed your dinner.

Answer: This scenario is a perfect application of Bayes' Theorem. We have:

P(John) = .45

P(Mary) = .25

P(Sue) = .30

P(NoSalt|John) = .04

P(NoSalt|Mary) = .02

P(NoSalt|Sue) = .03

In part a, we want to know P(John|NoSalt). Applying Bayes':

P(John|NoSalt) = P(NoSalt|John)P(John) / [P(NoSalt|John)P(John) + P(NoSalt|Mary)P(Mary) + P(NoSalt|Sue)P(Sue)]

= (.04)(.45) / [(.04)(.45) + (.02)(.25) + (.03)(.30)]

= 0.018 / (0.018 + 0.005 + 0.009)

= 0.5625

In part b, we want to know P(Mary|NoSalt). Again, applying Bayes' in the same way:

P(Mary|NoSalt) = P(NoSalt|Mary)P(Mary) / [P(NoSalt|John)P(John) + P(NoSalt|Mary)P(Mary) + P(NoSalt|Sue)P(Sue)]

= (.02)(.25) / [(.04)(.45) + (.02)(.25) + (.03)(.30)]

= 0.005 / (0.018 + 0.005 + 0.009)

= 0.15625
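Here's a short Python sketch that does the whole Bayes' computation at once, for all three workers. The dictionary layout is just one way to organize it.

```python
# Priors: share of dinners each worker packs
p_packer = {"John": 0.45, "Mary": 0.25, "Sue": 0.30}
# Likelihoods: P(NoSalt | worker)
p_nosalt_given = {"John": 0.04, "Mary": 0.02, "Sue": 0.03}

# Denominator of Bayes' theorem: total probability of a dinner with no salt
p_nosalt = sum(p_packer[w] * p_nosalt_given[w] for w in p_packer)

# Posterior P(worker | NoSalt) for every worker
posterior = {w: p_packer[w] * p_nosalt_given[w] / p_nosalt for w in p_packer}

print(f"P(NoSalt) = {p_nosalt:.3f}")
for worker, p in posterior.items():
    print(f"P({worker} | NoSalt) = {p:.5f}")
```

Computing every posterior gives a built-in sanity check: the three answers should add up to 1 (Sue's comes out to 0.28125).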

Question 5: In a southern state, it was revealed that 5% of all automobiles in the state did not pass inspection. Of the next ten automobiles entering the inspection station,

a. what is the probability that more than three will not pass inspection?

b. Determine the mean and standard deviation for the number of cars not passing inspection.

Answer: Since this is a case of pass/fail, we recognize this as a case for the binomial distribution. For part a, P(x>3) = 1-P(x=0)-P(x=1)-P(x=2)-P(x=3), so we'll have to calculate P(x) for x=0, 1, 2 and 3 using the formula:

P(x) = [n!/((n-x)! x!)] (p^x)(1-p)^(n-x) where n=10 and p=0.05

Sheesh! I hope we get a binomial distribution table if we get a question like this!

From table E.6 in the book, I get

P(x>3) = 1 - 0.5987 - 0.3151 - 0.0746 - 0.0105

P(x>3) = 0.0011

For part b, we use the easy formulas for mean and standard deviation of a binomial distribution:

Mean = np = (10)(0.05)

Mean = 0.5

Standard Deviation = SQRT(np(1-p)) = SQRT((0.5)(1-0.05)) = SQRT(0.475)

Standard Deviation = 0.689
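If there's no binomial table handy, a few lines of Python will do the arithmetic (standard library only; `math.comb` needs Python 3.8+, and `binom_pmf` is my own helper name).

```python
import math

def binom_pmf(x: int, n: int, p: float) -> float:
    """P(X = x) for X ~ Binomial(n, p); math.comb(n, x) = n! / ((n-x)! x!)."""
    return math.comb(n, x) * p**x * (1 - p)**(n - x)

n, p = 10, 0.05  # ten cars, 5% fail inspection

# (a) P(X > 3) = 1 - [P(0) + P(1) + P(2) + P(3)]
p_more_than_3 = 1 - sum(binom_pmf(x, n, p) for x in range(4))

# (b) mean and standard deviation of a binomial distribution
mean = n * p
sd = math.sqrt(n * p * (1 - p))

print(f"P(X > 3) = {p_more_than_3:.4f}")
print(f"mean = {mean}, sd = {sd:.3f}")
```

Since it doesn't round each term to four places the way the table does, this prints P(X > 3) as about 0.0010 rather than 0.0011.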

Posted by Eliezer at Sunday, January 27, 2008

Tags: Midterm

Sample midterm questions - Part 1

The midterm questions from last quarter's midterm have been posted with the answers. However, there's no explanation of the answers. I'll endeavor to explain how I think these answers are derived. If you think I got one wrong or if you have a better/easier way to get the answer, please let me know in a comment or email.

Since chapter 6 (normal distribution) is not included on our midterm, I'm going to skip those questions for now and focus on the questions that deal with chapters 1-5.

Question 1 & 2 - Skip

Question 3: Which of the following is not a property of a binomial experiment?

a. the experiment consists of a sequence of n identical trials

b. each outcome can be referred to as a success or a failure

c. the probabilities of the two outcomes can change from one trial to the next

d. the trials are independent

Answer: Think of the classic example of a binomial experiment, flipping a coin. All the flips are identical. Each flip has two possible outcomes, heads or tails. Each flip is independent of the previous flips. The probabilities do not change from one flip to the next. So C is the statement that is not a property of a binomial experiment, and C is the answer.

Exhibit 5-8: The random variable x is the number of occurrences of an event over an interval of ten minutes. It can be assumed that the probability of an occurrence is the same in any two time periods of an equal length. It is known that the mean number of occurrences in ten minutes is 5.3.

Question 4: Refer to Exhibit 5-8. The probability that there are 8 occurrences in 10 minutes is

a. .0241

b. .0771

c. .1126

d. .9107

Answer: As soon as I see that we’re talking about events occurring over a period of time, I think “Poisson Distribution”. It’s the whole “area of opportunity” concept. Therefore, apply the Poisson Formula (or use a lookup table) for x=8 and mean=5.3:

F(x=8) = (e^-5.3)(5.3^8)/8! = (0.00499)(622,596.9)/(40320) = 0.0771

So the answer is B.

Question 5: Refer to Exhibit 5-8. The probability that there are less than 3 occurrences is

a. .0659

b. .0948

c. .1016

d. .1239

Answer: We apply the Poisson Distribution formula again, but this time to determine

F(x<3)= F(x=0) + F(x=1) + F(x=2)

You can apply the Poisson formula individually for these 3 values, but I prefer to look them up in the table and get:

F(x<3)= 0.0050 + 0.0265 + 0.0701 = 0.1016

So answer C is correct.

Exhibit 5-5

Probability Distribution

| x | f(x) |
|----|------|
| 10 | .2 |
| 20 | .3 |
| 30 | .4 |
| 40 | .1 |

Question 6: Refer to Exhibit 5-5. The expected value of x equals

a. 24

b. 25

c. 30

d. 100

Answer: Apply the formula for the expected value: multiply each value by its probability and add them up.

E(x) = (10)(0.2) + (20)(0.3) + (30)(0.4) + (40)(0.1) = 2 + 6 + 12 + 4 = 24

Correct answer is A.

Question 7: Refer to Exhibit 5-5. The variance of x equals

a. 9.165

b. 84

c. 85

d. 93.33

Answer: Just apply the standard formula for the variance of a discrete distribution: multiply each squared deviation from the mean by the probability of that value, and add them up. (Note: You don't divide by the number of values as you would with a data sample or population. When you have a probability distribution, the probabilities do the weighting.)

Var = (10-24)^2(.2) + (20-24)^2(.3) + (30-24)^2(.4) + (40-24)^2(.1)

= (196)(.2) + (16)(.3) + (36)(.4) + (256)(.1)

= 39.2 + 4.8 + 14.4 + 25.6

= 84

Correct answer is B.
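Here's a quick Python check of Questions 6 and 7 together, treating Exhibit 5-5 as a value-to-probability mapping. Keeping the probabilities as exact fractions avoids any floating-point rounding in the results.

```python
from fractions import Fraction

# Exhibit 5-5 as value -> probability, kept as exact fractions
dist = {10: Fraction(2, 10), 20: Fraction(3, 10), 30: Fraction(4, 10), 40: Fraction(1, 10)}

assert sum(dist.values()) == 1  # a valid distribution sums to 1

# E(x): each value times its probability, summed
mean = sum(x * p for x, p in dist.items())

# Var(x): each squared deviation from the mean times its probability, summed
var = sum((x - mean) ** 2 * p for x, p in dist.items())

print(mean, var)
```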

Question 8: 20% of the students in a class of 100 are planning to go to graduate school. The standard deviation of this binomial distribution is

a. 20

b. 16

c. 4

d. 2

Answer: This is pretty straightforward, except that the formula sheet has the formula for variance. You need to remember to take the square root to get the standard deviation.

Std. Dev. = SQRT(np(1-p))

= SQRT ((100)(.20)(.80))

= SQRT (16)

= 4

Correct answer is C.

Questions 9, 10 and 11 - Skip

Question 12: Of five letters (A, B, C, D, and E), two letters are to be selected at random. How many possible selections are there?

a. 20

b. 7

c. 5!

d. 10

Answer: This question is actually rather vague. It doesn't tell us whether the order of the selection matters, i.e. whether (A,B) is considered the same selection as (B,A). From the answer key, it's pretty clear that they are considered the same, so we'll go with that.

Apply the binomial coefficient formula:

nCx = n! / ((n-x)! x!)

= 5! / (3! 2!)

= 120 / 12

= 10

Correct answer is D.

BTW, this problem could easily be solved by inspection (i.e. by just looking at it): You're selecting 2 letters out of a pool of 5. For the first letter there are 5 possibilities, for the second letter there are 4 possibilities (since the first letter, whatever it was, is no longer in the pool). 5x4 = 20. Then divide by 2 because each two-letter pair has a duplicate in the reverse order. 20/2 = 10.
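To see the ordered-vs-unordered distinction concretely, this Python sketch simply enumerates the selections with the standard `itertools` module and compares the counts with `math.comb` and `math.perm` (Python 3.8+).

```python
import math
from itertools import combinations, permutations

letters = "ABCDE"

# Order ignored: (A, B) and (B, A) count once
unordered = list(combinations(letters, 2))
# Order matters: (A, B) and (B, A) count separately
ordered = list(permutations(letters, 2))

print(len(unordered), math.comb(5, 2))
print(len(ordered), math.perm(5, 2))
print(unordered)
```

The unordered count is 10 and the ordered count is 20, which is exactly the "5 x 4, then divide by 2" reasoning.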

Question 13: If A and B are independent events with P(A) = 0.2 and P(B) = 0.6, then P(A U B) =

a. 0.62

b. 0.12

c. 0.60

d. 0.68

Answer: This question requires us to use the Addition Law and the multiplication rule for independent events (both are on the formula sheet):

Addition Law gives us: P(A U B) = P(A) + P(B) - P(A intersect B)

Multiplication rule of independent events says: P(A intersect B) = P(A)P(B)

Therefore,

P(A U B) = P(A) + P(B) - P(A)P(B)

= (0.2) + (0.6) - (0.2)(0.6)

= (0.8) - (0.12)

= 0.68

Correct Answer is D.

Question 14: The variance of the sample

a. can never be negative

b. can be negative

c. cannot be zero

d. cannot be less than one

Answer: This one is right out of the book. See page 82, where it says (in italics): neither the variance nor the standard deviation can ever be negative. But even if you somehow didn't remember that tidbit of information, you could just eyeball the formula for the variance: the numerator is a sum of squared deviations, which can never be negative, and the denominator is n - 1 (one less than the number of observations), which is positive as long as there are at least two observations. So, Answer A is correct.

Incidentally, the variance can be zero if the data points are all equal (and therefore all equal to the mean), in which case the numerator is zero and therefore the variance is 0. It can also be less than one if the data points are all relatively close to the mean.

Question 15: The value of the sum of the deviations from the mean must always be

a. negative

b. positive

c. positive or negative depending on whether the mean is negative or positive

d. zero

Answer: This one is similar to the question above, and it's also right out of the book, page 84, where it says this sum will always be zero. But again, if you missed that factoid, you can see that it's true from the definition of the mean: the sum of (x_i - mean) equals (sum of x_i) - n(mean), and since mean = (sum of x_i)/n, that's (sum of x_i) - (sum of x_i) = 0. Correct answer is D.

Question 16: If A and B are independent events with P(A) = 0.38 and P(B) = 0.55, then P(A|B) =

a. 0.209

b. 0.000

c. 0.550

d. 0.38

Answer: This is another tricky question. No formulas needed here. Just think about it: If A and B are independent, then by definition, the probability of A is not influenced by B. So the fact that B is "given" makes no difference; the probability of A is still 0.38. So P(A|B) = P(A) = 0.38. Answer D is correct.

Question 17: A six-sided die is tossed 3 times. The probability of observing three ones in a row is

a. 1/3

b. 1/6

c. 1/27

d. 1/216

Answer: Come on! Is this really graduate school?

3 independent events. Get the total probability by taking the product of the 3 events. Each one has a 1/6th probability of rolling a one. Therefore, total probability is (1/6)^3 = 1/216. Answer D is correct.

Question 18: If the coefficient of variation is 40% and the mean is 70, then the variance is

a. 28

b. 2800

c. 1.75

d. 784

Answer: This one is a bit tricky in that you need to remember that the standard deviation is the square root of the variance. First, use the formula for Coefficient of Variation:

CV = (StdDev / mean) x 100 %

rearrange to get:

Std Dev = CV x mean/100

= (40)(70)/100

= 28

Now since Var = StdDev^2, Var = 28^2 = 784

Answer D is correct.

Question 19: If two groups of numbers have the same mean, then

a. their standard deviations must also be equal

b. their medians must also be equal

c. their modes must also be equal

d. None of these alternatives is correct

Answer: Hmm. Does this one need explanation? If you understand mean, median, mode and standard deviation, you should understand that they're separate ways of describing data: knowing one of them doesn't pin down the others. For example, {5, 5, 5} and {0, 0, 15} both have a mean of 5, but their medians (5 vs. 0), modes (5 vs. 0) and standard deviations (0 vs. about 8.66) all differ. So Answer D is correct.

Has anyone noticed that D is the correct answer for questions 11-19, except for 14? What's the probability of that happening?
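A tongue-in-cheek answer using the binomial distribution, under a big assumption: that each answer is independently and equally likely to be a, b, c or d. (A real answer key written by a person almost certainly isn't generated that way.) The variable names are my own.

```python
from fractions import Fraction
from math import comb

n_questions = 9       # questions 11 through 19
n_d = 8               # all but question 14
p_d = Fraction(1, 4)  # assumption: answers uniformly random and independent

# Binomial probability of exactly 8 D's out of 9 questions
p_exactly = comb(n_questions, n_d) * p_d**n_d * (1 - p_d) ** (n_questions - n_d)

print(p_exactly, float(p_exactly))
```

That works out to 27/262144, roughly 1 in 9,700.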

Exhibit 3-3:

A researcher has collected this sample data. The mean of the sample is 5.

3 5 12 3 2

Question 20: Refer to Exhibit 3-3. The standard deviation is

a. 8.944

b. 4.062

c. 13.2

d. 16.5

Answer: Plug the numbers into the formula for the sample standard deviation:

StdDev = SQRT(((3-5)^2+(5-5)^2+(12-5)^2+(3-5)^2+(2-5)^2)/(5-1))

= SQRT((4+0+49+4+9)/4) = SQRT(66/4) = SQRT(16.5)

= 4.062

Don't forget that last step of taking the square root! Otherwise you're giving the variance which, of course, is one of the choices (D). B is the correct answer. Answer C (13.2) is the variance if this data were for an entire population, in which case you would divide by N=5. Remember to divide by n-1 for a sample.
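Python's standard `statistics` module can double-check Question 20 (and Question 15) on the Exhibit 3-3 data. Note which function divides by n - 1 and which divides by N.

```python
import statistics

data = [3, 5, 12, 3, 2]  # Exhibit 3-3

mean = statistics.mean(data)
sample_var = statistics.variance(data)       # divides by n - 1
sample_sd = statistics.stdev(data)           # square root of the sample variance
population_var = statistics.pvariance(data)  # divides by N

# Question 15 check: deviations from the mean always sum to zero
sum_of_deviations = sum(x - mean for x in data)

print(mean, sample_var, round(sample_sd, 3), population_var, sum_of_deviations)
```

The output lines up with the answer choices: 16.5 (D) is the sample variance, 4.062 (B) is the sample standard deviation, and 13.2 (C) is the population variance.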

Posted by Eliezer at Sunday, January 27, 2008

Tags: Midterm

Midterm study diversion (I)

If you need a break from studying for the midterm, think about this: Who Discovered Bayes's Theorem?

You think it's obvious, right? Bayes, of course. Well, it's not so obvious. In fact, Stephen M. Stigler, professor of statistics across town at the University of Chicago, wrote an article with just that title back in 1983 in the respected journal The American Statistician (vol. 37, no. 4, pp. 290-296). We have access to that journal through the Depaul online library. I'm in the middle of reading it and I must say it's a very entertaining piece. Sort of a whodunit for the mathematically inclined.

If you haven't taken advantage of the Depaul online library system yet, I highly recommend that you learn how to use it. It's a great resource and, after all, you're paying for it.

Here's how you can get to this article:

1. Access the online library at: http://www.lib.depaul.edu/

2. Click the link for Journal & Newspaper Articles in the Research section of the page.

3. Enter "american statistician" in the search box on the left and click Search.

4. The results screen shows the 5 locations where we have this journal (1 in print and 4 online) and the years covered by each location.

5. Since we're looking for an article from 1983, the only location that has that year is the first online location: JSTOR Arts and Sciences II Collection. Click that link.

6. Now you'll need your Depaul Campus Connect ID to log in to access the article.

7. You're now in JSTOR for The American Statistician. Click the link to Search This Journal and then run an article title search on "Who Discovered Bayes's Theorem". The rest is self-explanatory. Feel free to email me or post a comment if you have trouble getting to this article.

BTW, I skimmed the TOC of that issue and saw an article that looked interesting: On the Effect of Class Size on the Evaluation of Lecturers' Performance by Prof. Ayala Cohen of Technion. I scanned it, and it looks worth a read.

Posted by Eliezer at Sunday, January 27, 2008

Tags: Fun stuff

Saturday, January 26, 2008

Lecture 4 - Ch 6 - The Standard Normal Distribution

Standardizing the Normal Distribution

As you can see from the formula for the Normal Distribution in the previous post, the normal distribution has two parameters - the mean (mu) and the standard deviation (sigma). Other than those parameters, it's just a (complicated) function of x.

You know what that means? We have to integrate it! OOOOhh boy!

Well, fortunately for us, some math majors have taken it upon themselves to do the integration for us. If we standardize x by computing z = (x - mu)/sigma, then z follows the standard normal distribution (mean 0, standard deviation 1), and its definite integral from 0 to almost any positive value has been calculated and tabulated. That means we can use these handy-dandy tables to find the probability of z falling between 0 and any value.

Finding the probability of x falling between a and b

Looking up Pr(0 < x < a ) in the table is helpful, but often we want to know the probability of x falling between two values, a and b, or Pr (x > a) or Pr(x < a). We can easily use the values in the table to determine any of these probabilities.
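For anyone curious how the table values relate to each other, here's a minimal Python sketch. It assumes the table gives Pr(0 < z < a) as described above, and it computes the standard normal cumulative probability with `math.erf` from the standard library instead of looking values up. All the function names are my own, and the x = 130, mu = 100, sigma = 15 example is made up for illustration.

```python
import math

def phi(z: float) -> float:
    """Standard normal cumulative probability Pr(Z < z), computed with the error function."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def table_value(a: float) -> float:
    """Pr(0 < Z < a) for a >= 0, i.e. the kind of entry the table lists."""
    return phi(a) - 0.5

def prob_between(a: float, b: float) -> float:
    """Pr(a < Z < b) for any a < b (either sign)."""
    return phi(b) - phi(a)

def prob_greater(a: float) -> float:
    """Pr(Z > a), the right-hand tail."""
    return 1 - phi(a)

def standardize(x: float, mu: float, sigma: float) -> float:
    """Convert x from a normal distribution with mean mu and std. dev. sigma to a z-score."""
    return (x - mu) / sigma

print(round(table_value(1.96), 4))    # table entry for a = 1.96
print(round(prob_between(-1, 1), 4))  # within one standard deviation of the mean
# Hypothetical example: x = 130 from a normal distribution with mu = 100, sigma = 15
print(round(prob_greater(standardize(130, 100, 15)), 4))
```

In table terms these are the familiar rules: for a > 0, Pr(z > a) = 0.5 - table(a) and Pr(z < a) = 0.5 + table(a), and by symmetry Pr(-a < z < 0) = table(a).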

Posted by Eliezer at Saturday, January 26, 2008

Tags: Lecture Notes

Lecture 4 - Ch 6 - The Normal Distribution

The Normal Distribution

A typical continuous distribution is the Normal Distribution, which looks like the proverbial "bell-shaped" curve. However, just like bells can take many shapes, there are many different Normal Distributions. In fact, the Normal Distribution is really a family of distributions. Although all the curves in the family of Normal Distributions have certain common characteristics, a particular instance of the Normal Distribution is defined by its mean and standard deviation.

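The Normal Distribution is defined generally by the function:

f(x) = [1/(sigma SQRT(2 pi))] e^(-(x - mu)^2 / (2 sigma^2))

Integrating this function directly is a pain, so the usual approach is to: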

1. "standardize" the formula - called, oddly enough, the standard normal distribution

2. compute tables for the standardized formula

Posted by Eliezer at Saturday, January 26, 2008

Tags: Lecture Notes

Lecture 4 - Ch 6 - Continuous Random Variables

Discrete vs. Continuous Random Variables

We then started learning about continuous random variables. These are random variables which can take (more or less) any value within some range. Examples of these types of variables are the length of a piece of wire produced by a machine, the weight of a bag of groceries, the time it takes to print a page from a printer/copier, etc.

By contrast, discrete random variables can take only a countable (and usually finite) number of values - for example, the number of credits that a student has earned, the age in whole years of the employees of a company, the pass/fail results of an experiment such as flipping a coin, etc.

Posted by Eliezer at Saturday, January 26, 2008 0 comments

Tags: Lecture Notes

Thursday, January 24, 2008

Lecture 4 - Ch 6 - NOT on midterm

After spending about an hour going over the normal distribution and the standard normal distribution (notes will follow in a different blog entry), Lecture 4 was cut short tonight due to a fire alarm. When we returned to the room, the message below appeared on the board:

- Class Dismissed

- Ch. 6 not included!

Posted by Eliezer at Thursday, January 24, 2008 0 comments

Tags: Lecture Notes

Lecture 3 - Ch 5 - Poisson Distribution

The Poisson Distribution can be derived from the binomial distribution (as a limiting case) and describes the probability of a given number of successes (events) within a certain "area of opportunity". The area of opportunity could be a time interval, a surface area, etc.

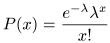

For a scenario which follows a Poisson Distribution, the probability of x success events occurring in an area of opportunity is:

\[ P(x) = \frac{e^{-\lambda}\,\lambda^{x}}{x!} \]

where

lambda = the average (mean) number of success events which occur in that area of opportunity

The mean of a Poisson Distribution is lambda.

The standard deviation of a Poisson Distribution is SQRT(lambda).
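A Python sketch of the Poisson formula P(x) = (e^-lambda)(lambda^x)/x! together with its mean and standard deviation. The function names are hypothetical, and lambda = 9 is just an illustrative value (e.g. an average of 9 claims per week).

```python
import math

def poisson_pmf(x: int, lam: float) -> float:
    """P(X = x) = e^-lambda * lambda^x / x! for x = 0, 1, 2, ..."""
    return math.exp(-lam) * lam ** x / math.factorial(x)

def poisson_mean_sd(lam: float) -> tuple[float, float]:
    """The mean of a Poisson distribution is lambda; the standard deviation is SQRT(lambda)."""
    return lam, math.sqrt(lam)

print(round(poisson_pmf(7, 9), 4))   # 0.1171
print(poisson_mean_sd(9))            # (9, 3.0)
```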

Posted by Eliezer at Thursday, January 24, 2008 0 comments

Tags: Lecture Notes

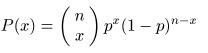

Lecture 3 - Ch 5 - Binomial Distribution

The binomial distribution is used to describe a discrete random variable under these conditions:

- An experiment is repeated a fixed number of times - n

- Each "experiment" has two possible outcomes - success/failure, heads/tails, etc

- The probability of the two outcomes is constant across the experiments

- Each outcome is statistically independent of the others. Like when you flip a coin, the chances of heads on the 100th flip are 50/50 even if you had 99 tails in a row before it. (Although if that really happened, I'd check the coin!)

The probability of x successes in n trials is:

\[ P(x) = \binom{n}{x} p^{x} (1-p)^{n-x} \]

where

x = the number of successes

n = the number of experiments/trials/observations

p = the probability of success in any single experiment/trial/observation

The mean of the binomial distribution is np.

The variance of the binomial distribution is np(1-p), so the standard deviation is SQRT(np(1-p)).
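A Python sketch of the binomial formulas, using the standard library's math.comb (Python 3.8+) for the number of ways to choose which x of the n trials are successes. The function names and the coin example are illustrative.

```python
import math

def binomial_pmf(x: int, n: int, p: float) -> float:
    """P(X = x): ways to choose which x trials succeed, times p^x (1-p)^(n-x)."""
    return math.comb(n, x) * p ** x * (1 - p) ** (n - x)

def binomial_mean_sd(n: int, p: float) -> tuple[float, float]:
    """Mean np; variance np(1-p); standard deviation SQRT(np(1-p))."""
    return n * p, math.sqrt(n * p * (1 - p))

# Example: 2 heads in 4 flips of a fair coin
print(binomial_pmf(2, 4, 0.5))     # 0.375
print(binomial_mean_sd(100, 0.5))  # (50.0, 5.0)
```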

Posted by Eliezer at Thursday, January 24, 2008 0 comments

Tags: Lecture Notes

Wednesday, January 23, 2008

Lecture 3 - Ch 5 - Discrete Random Variables

We calculated the mean, variance and standard deviation for a set of data earlier. Those calculations are made on a finite set of data points (observations).

If, rather than data, we have an event that is described theoretically by a discrete random variable with possible values and the probability of each value, we can make similar calculations. The difference is that in a set of data each observation carries the same weight as every other, while for a random variable we weight each possible value by its probability.

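Mean - Instead of adding up all the values and dividing by the number of values, we multiply each possible value by its probability and add up the products.

Variance - Multiply the squared difference between each possible value and the mean by that value's probability, and add up the products.

Standard Deviation - square root of the variance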
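A Python sketch of the probability-weighted calculations for a discrete random variable. The die example and the function name are illustrative only; the distribution is passed as a mapping from each possible value to its probability.

```python
import math

def rv_summary(dist: dict[float, float]) -> tuple[float, float, float]:
    """Mean, variance and standard deviation of a discrete random variable.

    dist maps each possible value to its probability (the probabilities must sum to 1).
    """
    mean = sum(x * p for x, p in dist.items())
    variance = sum((x - mean) ** 2 * p for x, p in dist.items())
    return mean, variance, math.sqrt(variance)

# Example: one roll of a fair six-sided die
die = {x: 1 / 6 for x in range(1, 7)}
mean, var, sd = rv_summary(die)
print(round(mean, 4), round(var, 4))  # 3.5 2.9167
```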

Posted by Eliezer at Wednesday, January 23, 2008 0 comments

Tags: Lecture Notes

Lecture 3 - Ch 4 - Counting Rules

These counting rules are basics from the GMAT, so it's mostly review. Getting these problems right is a matter of understanding the scenario and identifying how to apply the two simple formulas for permutations and combinations.

All of these cases involve selecting some number, call it x, of items from a pool of n possible items.

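Permutations: Use this formula when order matters in the scenario, i.e. if (a,b) and (b,a) count as two different selections:

\[ \frac{n!}{(n-x)!} \]

Combinations: Use this formula when order doesn't matter, i.e. if (a,b) and (b,a) count as the same selection:

\[ \binom{n}{x} = \frac{n!}{x!\,(n-x)!} \]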

There are more complicated examples involving replacement and other factors, but for our purposes, I'll leave it at this and perhaps bring those up in a side note blog entry.
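A Python sketch of the two counting formulas (the function names are hypothetical; Python 3.8+ also provides math.perm and math.comb, which compute the same quantities).

```python
from math import factorial

def permutations(n: int, x: int) -> int:
    """Ordered selections of x items from n: n! / (n - x)!"""
    return factorial(n) // factorial(n - x)

def combinations(n: int, x: int) -> int:
    """Unordered selections of x items from n: n! / (x! (n - x)!)"""
    return factorial(n) // (factorial(x) * factorial(n - x))

# Choosing 2 of the 5 items a, b, c, d, e
print(permutations(5, 2))  # 20: (a,b) and (b,a) are counted separately
print(combinations(5, 2))  # 10: {a,b} is counted once
```

Each unordered selection of x items can be arranged in x! orders, which is why the permutation count is always x! times the combination count.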

For those interested in TeX/LaTeX: apparently LEd with MiKTeX 2.7 doesn't support the \binom control sequence. If it did, I could just create the symbol above with:

\binom{n}{x}

Since it doesn't, the best I could do is to use an array and code it with:

\left(\begin{array}{c}n\\x\end{array}\right)

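Yuck! (For what it's worth, \binom is defined by the amsmath package, so adding \usepackage{amsmath} to the preamble may be all that's missing.)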

Posted by Eliezer at Wednesday, January 23, 2008 0 comments

Tags: Lecture Notes

Lecture 3 - CH 4 - Bayes' Theorem

Bayes' Theorem allows us to calculate P(B|A) when we don't have the usual information that would allow us to calculate it. I.e. we don't know P(B and A) or P(A).

We know P(B|A) = P(B and A) / P(A)

We'll derive alternative expressions for both the numerator and the denominator.

Since P(A|B) = P(A and B) / P(B) and P(A and B) = P(B and A), we can multiply both sides by P(B), rearrange the terms and get:

P(B and A) = P(A|B)P(B)

which becomes our numerator.

For the denominator, we note that

P(A) = P(A|B)P(B) + P(A|B')P(B')

It's worth thinking about this for a second. Time's up! What it means is that the total probability of A is equal to the conditional probability of A given B (times the probability of B) plus the conditional probability of A given B' (times the probability of B'). Since B and B' are mutually exclusive and together cover the entire sample space, the sum of those conditional probabilities (each times its respective factor) equals the entire probability of A.

If there were more than two possibilities for the condition - for instance B1, B2, B3, ... - then the total probability of A would be the sum of all the conditionals (each times the probability of its condition), i.e.:

P(A) = P(A|B1)P(B1) + P(A|B2)P(B2) + P(A|B3)P(B3) + ...

This is true as long as the events B1, B2, B3, ... are mutually exclusive and exhaustive of the sample space.

Now, back to our original formula that we were trying to evaluate:

Our new expression for P(B|A) becomes:

P(B|A) = P(A|B)P(B) / [P(A|B)P(B) + P(A|B')P(B')]
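A Python sketch of Bayes' Theorem in the two-condition form (B and B') and in the form with more than two mutually exclusive and exhaustive conditions B1, B2, B3, .... The numbers in the example are hypothetical, chosen only to show that P(B|A) can be much smaller than P(A|B). Function names are also hypothetical.

```python
def bayes(p_a_given_b: float, p_b: float, p_a_given_not_b: float) -> float:
    """P(B|A) = P(A|B)P(B) / [P(A|B)P(B) + P(A|B')P(B')]."""
    numerator = p_a_given_b * p_b
    denominator = numerator + p_a_given_not_b * (1 - p_b)  # total probability of A
    return numerator / denominator

def bayes_partition(likelihoods: list[float], priors: list[float], i: int) -> float:
    """P(Bi|A) when B1, B2, ... are mutually exclusive and exhaustive.

    likelihoods[j] = P(A|Bj), priors[j] = P(Bj).
    """
    total = sum(l * p for l, p in zip(likelihoods, priors))  # P(A) by total probability
    return likelihoods[i] * priors[i] / total

# Hypothetical numbers: P(B) = 0.01, P(A|B) = 0.9, P(A|B') = 0.05
print(round(bayes(0.9, 0.01, 0.05), 4))  # 0.1538
```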

Posted by Eliezer at Wednesday, January 23, 2008 0 comments

Tags: Lecture Notes

Lecture 3 - Caveat

I was unable to attend Lecture 3 for personal reasons, so I'm relying heavily on the notes that were posted by Prof. Selcuk, the class notes of several people (thank you!), the material in the book and a meeting with Prof. Selcuk during office hours on Wednesday.

As always, use these notes at your own risk. I do my best to provide good class notes and additional material for thought, but always check with your own sources also. If you find any mistakes, I'd really appreciate you letting me know via comment or email.

Posted by Eliezer at Wednesday, January 23, 2008 0 comments

Tags: Lecture Notes

Wednesday, January 16, 2008

Excel Quartiles - Not so fast...

The formula that I posted for Quartiles in Excel ((n+3)/4) is not precise. Here's the algorithm for computing quartiles that Excel uses according to the Microsoft knowledge base:

The following is the algorithm used to calculate QUARTILE():

1. Find the kth smallest member in the array of values, where:

k = ((quart/4)*(n-1)) + 1

If k is not an integer, truncate it but store the fractional portion (f) for use in step 3. And where: quart = the quartile you want to find (0 to 4) and n = the number of values in the array.

2. Find the smallest data point in the array of values that is greater than the kth smallest -- the (k+1)th smallest member.

3. Interpolate between the kth smallest and the (k+1)th smallest values:

Output = a[k] + (f*(a[k+1]-a[k]))

where a[k] = the kth smallest and a[k+1] = the (k+1)th smallest.
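A Python sketch of the quoted steps, checked against the data set from the "Quartiles in HW1" post (2, 4, ..., 16), where Excel reported Q1 = 5.5. Assumptions: k and a[k] are 1-based as in the description, and step 2 is taken literally as "the (k+1)th smallest member" (with tied values this can differ from "the smallest value greater than the kth"). The function name is hypothetical; this is not Microsoft's code.

```python
import math

def excel_quartile(values: list[float], quart: int) -> float:
    """Sketch of the QUARTILE() steps quoted above."""
    a = sorted(values)
    n = len(a)
    k_real = (quart / 4) * (n - 1) + 1
    k = math.floor(k_real)      # step 1: truncate k ...
    f = k_real - k              # ... but keep the fractional portion f
    kth = a[k - 1]              # kth smallest (convert to a 0-based index)
    if f == 0:
        return kth              # nothing to interpolate
    next_val = a[k]             # step 2: (k+1)th smallest
    return kth + f * (next_val - kth)  # step 3: interpolate

data = [2, 4, 6, 8, 10, 12, 14, 16]
print(excel_quartile(data, 1))  # 5.5
```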

Posted by Eliezer at Wednesday, January 16, 2008 0 comments

Quartiles in HW1

Recall from a previous post that different software packages have different ways of calculating quartiles.

The formula for quartiles that we learned in class is:

Position of Q1 = (n+1)/4

Position of Q2 = (n+1)/2

Position of Q3 = 3(n+1)/4

Example: Let's say your data set is (2, 4, 6, 8, 10, 12, 14, 16)

n = 8

Position of Q1 = 9/4 = 2.25

We interpolate between the 2nd value (4) and the 3rd value (6) and get Q1 = 4.5

I haven't checked the documentation, but I've empirically determined that Minitab uses this same formula.

[EDIT] Before I go on to talk about Excel, I need to mention that the last step of interpolating between values is NOT how our book instructs us to calculate the quartile. Jeffrey McNamara pointed out to me that Rule 3 on page 77 says: If the result is neither a whole number nor a fractional half, you round the result to the nearest integer and select that ranked value.

So in our example, where the position of Q1 is 2.25, we would round that to 2 and select the 2nd ranked value which is 4.

Again, Minitab uses the interpolation method and, as Frank Cardulla used to say, "You pays your money, you takes your choice."

Kudos to Jeff for the catch!

[/EDIT]

Excel uses a different formula which is apparently:

Position of Q1 = (n+3)/4

In our example, this would mean:

Position of Q1 = 11/4 = 2.75

Interpolating .75 between the 2nd and 3rd value gives us Q1 = 5.5

This is indeed what MS Excel calculated when I used the Quartile function on this data set.

Conclusion: It seems to me to be a good idea to use [either the book method or] Minitab (or manual calculations) to calculate the quartiles for the homework.
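For comparison, a Python sketch of the class/Minitab method (position (n+1)/4 with linear interpolation) and the book's rounding method. Only Rule 3 of the book is quoted above, so the handling of whole-number and half positions (use that value; average the two neighbours) is an assumption based on that rule's wording. Clamping positions to 1..n for tiny samples is also an assumption. Function names are hypothetical.

```python
import math

def _position(n: int, q: int) -> float:
    """Quartile position q(n+1)/4, clamped to 1..n (clamping is an assumption)."""
    return min(max(q * (n + 1) / 4, 1), n)

def class_quartile(values, q):
    """Class/Minitab method: interpolate between the two neighbouring ranked values."""
    a = sorted(values)
    pos = _position(len(a), q)
    lower = math.floor(pos)
    frac = pos - lower
    if frac == 0:
        return a[lower - 1]
    return a[lower - 1] + frac * (a[lower] - a[lower - 1])

def book_quartile(values, q):
    """Book method: Rule 3 rounds to the nearest ranked value."""
    a = sorted(values)
    pos = _position(len(a), q)
    lower = math.floor(pos)
    frac = pos - lower
    if frac == 0:           # assumed rule: whole number -> that ranked value
        return a[lower - 1]
    if frac == 0.5:         # assumed rule: fractional half -> average the two values
        return (a[lower - 1] + a[lower]) / 2
    return a[round(pos) - 1]  # Rule 3: round to the nearest integer position

data = [2, 4, 6, 8, 10, 12, 14, 16]
print(class_quartile(data, 1), book_quartile(data, 1))  # 4.5 4
```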

Posted by Eliezer at Wednesday, January 16, 2008 0 comments

Tags: Homework

Using Minitab and Excel in the homework

Lowkeydad and I had the same question about the homework: Do we just use Minitab/Excel to make the calculations? Or do we need to do them manually (i.e. with a calculator) and/or show the formula that was used and/or any work?

I emailed the following question to Prof. Selcuk:

Just to be clear... When we're asked to compute the mean, variance and standard deviation (in 3.27) and the covariance and coefficient of correlation (in 3.43) and all the calculations in 3.61 etc - is it sufficient to use Minitab/Excel to compute these statistics and simply report the results?

His reply:

Yes, you don’t have to go thru each step in the formulas. Be careful with excel though, especially when the sample size is small. This may lead to a different result than in the answer key, and the TA may think that you’ve done something wrong and cut points when grading.

Posted by Eliezer at Wednesday, January 16, 2008 0 comments

Tuesday, January 15, 2008

Minitab

If you didn't get Minitab with your textbook, you can "rent" it for 6 months ($30) or a year ($50) or get a 30-day free trial version.

http://www.minitab.com/products/minitab/demo/default.aspx

** Props to Patrick Feeney for providing that tip **

The data files are provided in Excel format also, along with a set of templates for doing the calculations.

Posted by Eliezer at Tuesday, January 15, 2008 0 comments

Post lecture 2 research and notes

The talk about conditional probability reminded me of one of my favorite mathematical paradoxes - the so-called Monty Hall Problem. Warning: This may confuse you! Don't read any further unless you really like puzzles and paradoxes.

The Monty Hall Problem

There was once a TV game show called Let's Make A Deal which was hosted by Monty Hall. He played different games with the studio audience similar to the way Drew Carey does in The Price is Right.

One of the games goes like this:

1. Monty presents to you three doors, labeled 1, 2 and 3. He tells you that there's a car behind one door (it's a big door) and goats behind the other two. You get to pick one door.

2. After you make your choice, Monty, who knows where the car is, opens one of the other two doors to reveal a goat. He then gives you the option to switch to the remaining unopened door.

The question is: Would you switch?

I'll give you some time to ponder...

Time's up!

Intuitively, you would think that there's no real advantage to switching. At step 1, you had a 1 in 3 chance of picking the car. At step 2, you now have a 1 in 2 chance of picking the car. Your original choice is still no better than the other door. Both have a 50/50 chance.

I'm going to stop here for two reasons:

1. I have a meeting to go to.

2. I'm still not sure why the intuitive solution is incorrect.

In the meantime, I'll refer you to two web pages:

Wikipedia - Monty Hall Problem

Play the Monty Hall Problem online

Excerpt from The American Statistician

I hope to come back to this soon with some clarity.

...

Ok, I'm back with a fairly simple explanation (although not rigorous) that I think makes sense...

When you make your initial door selection, you may have chosen the car or goats:

Case 1: 1/3 chance of having chosen the car

Case 2: 2/3 chance of a goat.

In case 1, after Monty reveals a goat behind another door, switching is bad since you were correct with your initial guess.

In case 2, after Monty reveals a goat, switching is good since your first guess was a goat and Monty's door was a goat and switching would get you to the car.

So there's a 1/3 chance that switching is bad and a 2/3 chance that switching is good. Therefore, you should switch!

Make sense?

I'd really like to work on explaining this in terms of the conditional probabilities that we learned in class.
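A small exact enumeration in Python, in the spirit of the conditional-probability approach. It assumes the standard rules of the game: the car is equally likely to be behind any door, your first pick is uniform, Monty knows where the car is and always opens a goat door you didn't pick, and he chooses at random when two goat doors are available. The function name is hypothetical.

```python
from fractions import Fraction

def monty_hall_switch_win_probability() -> Fraction:
    """Exact P(win by switching) under the assumptions stated above."""
    doors = (1, 2, 3)
    p_win = Fraction(0)
    for car in doors:                  # car equally likely behind each door
        for pick in doors:             # your first pick, also 1/3 each
            goat_doors = [d for d in doors if d != pick and d != car]
            for opened in goat_doors:  # Monty picks among the goat doors he may open
                p = Fraction(1, 3) * Fraction(1, 3) * Fraction(1, len(goat_doors))
                switch_to = next(d for d in doors if d not in (pick, opened))
                if switch_to == car:
                    p_win += p
    return p_win

print(monty_hall_switch_win_probability())  # 2/3
```

Each (car, pick) pair has probability 1/9, and the six pairs where the first pick is a goat are exactly the ones where switching wins, giving 6/9 = 2/3, which matches the case-by-case argument.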

Posted by Eliezer at Tuesday, January 15, 2008 0 comments

Tags: Fun stuff

Sunday, January 13, 2008

Homework 1 has been assigned

In case you didn't get it, homework #1 (due next Thursday) consists of the following exercises from the book:

1) 3.27 a, b and c

2) 3.35

3) 3.43

4) 3.61

5) 3.69

6) 4.11

7) 4.15

While we must maintain strict academic integrity, questions on the homework can be posted to comments. I'm moderating comments and I won't let anything through that would be questionable.

That said, I don't think there's any issue of integrity in explaining how to use Minitab or Excel to calculate the statistics, which may be a sticky point for first-time users. After all, it's a class in statistics, not in how to use the tools, right? Feel free to email me directly also.

Posted by Eliezer at Sunday, January 13, 2008 2 comments

Tags: Homework

Lecture 2 - Pop quiz #2

Prove: If A is independent of B, then B is independent of A. I.e. P(A|B) = P(A) implies P(B|A) = P(B).

Answer:

That's how I did it. If you have a different proof, I'd be interested in hearing it.
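One standard way to write the proof, which may differ from the one originally posted, assuming P(A) > 0 and P(B) > 0:

```latex
\begin{align*}
P(B \mid A) &= \frac{P(A \cap B)}{P(A)}          && \text{definition of conditional probability} \\
            &= \frac{P(A \mid B)\,P(B)}{P(A)}    && \text{since } P(A \cap B) = P(A \mid B)\,P(B) \\
            &= \frac{P(A)\,P(B)}{P(A)}           && \text{since } P(A \mid B) = P(A) \\
            &= P(B)
\end{align*}
```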

Posted by Eliezer at Sunday, January 13, 2008 0 comments

Tags: Lecture Notes, Quizzes

Lecture 2 - Ch 4 - Independency

A and B are independent events if and only if P(A|B) = P(A).

This is basically saying that A and B are independent only if the probability of A occurring given that B has occurred is the same probability as A happening in any case (even if B had not occurred). In other words, the probability of A occurring is not influenced by whether B happens or not. I.e. A is independent of B.

We also learned that if A is independent of B, then B is independent of A. Meaning,

P(A|B) = P(A) implies P(B|A) = P(B).

The proof of the statement above was given as a pop quiz. See next post for the solution. (I know... you can't wait!)

The Multiplication Rule

Another check for independency can be derived as follows:

We know from the definition of conditional probability that

P(A|B) = P(A/\B) / P(B)

By multiplying both sides by P(B), we get: P(A|B)*P(B) = P(A/\B)

And we know that if the events are independent then P(A|B) = P(A), so substituting this on the left side of the equation, we get the multiplication rule:

P(A)*P(B) = P(A/\B)

The multiplication rule is more commonly used as a test of independence than the first definition, but we need to know and understand both definitions.
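A quick Python check of the multiplication rule on a hypothetical example (one roll of a fair die, chosen only for illustration), using exact fractions so the equality test isn't thrown off by rounding. The function names are hypothetical.

```python
from fractions import Fraction

def prob(event: set, sample_space: set) -> Fraction:
    """Favorable outcomes / total outcomes (all outcomes equally likely)."""
    return Fraction(len(event & sample_space), len(sample_space))

def independent(a: set, b: set, sample_space: set) -> bool:
    """Multiplication-rule test: independent iff P(A and B) == P(A) * P(B)."""
    return prob(a & b, sample_space) == prob(a, sample_space) * prob(b, sample_space)

die = {1, 2, 3, 4, 5, 6}
even = {2, 4, 6}
print(independent(even, {1, 2}, die))     # True:  1/6 == 1/2 * 1/3
print(independent(even, {4, 5, 6}, die))  # False: 1/3 != 1/2 * 1/2
```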

We went over an example of possible discrimination in promotions within the police department. That example is a good sample midterm question, so review it!

Posted by Eliezer at Sunday, January 13, 2008 0 comments

Tags: Lecture Notes

Lecture 2 - Ch 4 - Conditional Probability

Given two events, A & B:

the probability of A, given that B has occurred, is denoted by P(A|B) and is calculated as:

P(A|B) = P(A/\B) / P(B)

Intuitively, we understand this as follows:

Look at the Venn diagram in the previous post. Once we know that B has occurred (since that's a given), B is our new sample space. And the only way for A to occur now is for the outcome to fall in the overlap of A and B, i.e. in A/\B.

Therefore, the probability of A occurring now is the probability of that overlap relative to the probability of the new sample space, i.e. P(A/\B) / P(B).
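A Python sketch of the "B becomes the new sample space" idea for equally likely outcomes, using a hypothetical die-roll example: counting outcomes inside B gives the same answer as P(A/\B) / P(B). The function name is hypothetical.

```python
from fractions import Fraction

def conditional(a: set, b: set) -> Fraction:
    """P(A|B) with equally likely outcomes: B is the new sample space,
    and A can only occur through the outcomes in both A and B."""
    return Fraction(len(a & b), len(b))

die = set(range(1, 7))
even = {2, 4, 6}
greater_than_3 = {4, 5, 6}

p_a_and_b = Fraction(len(even & greater_than_3), len(die))
p_b = Fraction(len(greater_than_3), len(die))
print(conditional(even, greater_than_3), p_a_and_b / p_b)  # 2/3 2/3
```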

Posted by Eliezer at Sunday, January 13, 2008 0 comments

Tags: Lecture Notes

Friday, January 11, 2008

Lecture 2 - Ch 4 - Basic Probability

We covered basic probability concepts.

The probability of some event, A, is:

P(A) = # of favorable outcomes / total possible outcomes (when all outcomes are equally likely)

If we consider two events, A & B, they can be represented by a Venn diagram:

The entire yellow area, S, is the sample space.

P(A U B) = P(A) + P(B) - P(A /\ B)

We subtract P(A /\ B) because that overlap area (dark red in our Venn Diagram) is double counted if we just add P(A) and P(B).
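A Python check of the addition rule on a hypothetical die-roll example: adding P(A) and P(B) counts the overlap twice, and subtracting P(A /\ B) once fixes it.

```python
from fractions import Fraction

die = set(range(1, 7))
a = {2, 4, 6}   # even roll
b = {4, 5, 6}   # roll greater than 3

def p(event: set) -> Fraction:
    """Favorable outcomes / total outcomes for one roll of a fair die."""
    return Fraction(len(event), len(die))

print(p(a) + p(b))                       # 1: the overlap {4, 6} is counted twice
print(p(a | b), p(a) + p(b) - p(a & b))  # 2/3 2/3
```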

Posted by Eliezer at Friday, January 11, 2008 0 comments

Tags: Lecture Notes

Sample vs. Population

Use English letters for sample statistics and Greek letters for the analogous population stat.

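Sample statistics such as the variance and standard deviation divide by (n-1), where n is the sample size, while the analogous population parameters divide by N, the population size. The reason for this difference is beyond the scope of this class (at least for now).

The exception is the mean, which divides by n for a sample and by N for a population.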
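A Python sketch contrasting the two divisors on a small hypothetical data set. The hand-written functions follow the n-1 and N definitions; statistics.variance and statistics.pvariance from Python's standard library compute the sample and population versions respectively, so they should agree.

```python
import statistics

def sample_variance(xs):
    m = sum(xs) / len(xs)                 # the mean divides by n in both cases
    return sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

def population_variance(xs):
    m = sum(xs) / len(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)

data = [2, 4, 4, 4, 5, 5, 7, 9]
print(population_variance(data), statistics.pvariance(data))  # both equal 4
print(round(sample_variance(data), 4), round(statistics.variance(data), 4))  # 4.5714 4.5714
```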

Posted by Eliezer at Friday, January 11, 2008 2 comments

More on covariance and correlation coefficient

So, what do the covariance and correlation coefficient mean?

In theory, the covariance measures the direction of the linear relationship between the two data sets, i.e. whether they tend to move together.

At a high level:

If covar(x,y) > 0 then we can say there's a positive correlation. I.e., as x increases, y also tends to increase.

If covar(x,y) < 0 then there's a negative correlation. I.e., as x increases, y tends to decrease.

It was noted that the covariance isn't very useful because it's influenced by the magnitude of the values. For example, if the observations in both sets are all multiplied by 10 (which could happen if you simply change the units of measure from, say, centimeters to millimeters), the covariance becomes 100 times larger even though the actual correlation of the data has not changed at all.

Note: We didn't discuss why, on an intuitive level, the formula for the covariance is a measure of the linear correlation of the data sets. I'll leave that for further thought later on.

In any case, the covariance is standardized by dividing by the product of the standard deviations. (Again, why this works on an intuitive level is not clear to me. But it does!) Covariance is therefore a pretty useless statistic by itself, but it is part of the calculation of the correlation coefficient, which is very useful.

The range of values for the correlation coefficient is from -1 to 1. A correlation coefficient of 0 indicates no linear relationship, for example because the data are wildly scattered. (If the data lie on a perfectly horizontal or vertical line, one of the standard deviations is 0 and the correlation coefficient is undefined.) The closer the correlation coefficient is to 1 or -1, the stronger the linear relationship between the data sets.
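A Python sketch of the unit-change argument on a small hypothetical data set: multiplying both sets by 10 multiplies the covariance by 100 but leaves the correlation coefficient unchanged. The sample standard deviations are taken as SQRT(covar(x,x)) and SQRT(covar(y,y)), which is the same n-1 formula. Function names are hypothetical.

```python
import math

def covariance(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    return sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / (len(x) - 1)

def correlation(x, y):
    # r = covar(x, y) / (sx * sy); covar(x, x) is the sample variance of x
    return covariance(x, y) / math.sqrt(covariance(x, x) * covariance(y, y))

x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
x10 = [10 * v for v in x]   # same data in units 10 times smaller (e.g. cm -> mm)
y10 = [10 * v for v in y]

print(covariance(x, y), covariance(x10, y10))                        # 1.5 150.0
print(round(correlation(x, y), 4), round(correlation(x10, y10), 4))  # 0.7746 0.7746
```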

Posted by Eliezer at Friday, January 11, 2008 0 comments

Using MiKTeX and LEd

Well, I got MiKTeX (an implementation of LaTeX) installed and LEd as an integrated editor/viewer. It was actually relatively painless. I only had one installation glitch which may have been caused by the fact that I installed LEd before MiKTeX.

I was unable to see my formatted text in the built-in DVI viewer in LEd. I checked the configuration options and fixed the problem by changing the "TeX Distribution" option from MiKTeX 2.4 to MiKTeX 2.6 (even though I'm actually using 2.7). In any case, that was the only problem I had.

Actually understanding how to code formulas in TeX is a different story. I haven't found a really good tutorial yet, but David Wilkins's Getting Started with LaTeX has some good documentation, including examples.

Here's my first try at creating a somewhat complex formula from class:

Here's the LaTeX code that's used to create the formula:

\[ covariance (x,y) = \frac {\sum_{i=1}^{n} (x_i-\bar{x})(y_i-\bar{y})}{n-1} \]

Posted by Eliezer at Friday, January 11, 2008 2 comments

Lecture 2 - Ch 3 - Correlating two sets of data

In the first lecture, we talked briefly about the correlation of two sets of data in a scatter plot. That discussion described the correlation in a qualitative way. Here we define the measure of correlation more precisely with two measures: covariance and coefficient of correlation.

For two sets of data, x and y:

Covariance(x,y) = [ Sum (xi-xmean)*(yi-ymean) ] / (n-1)

Coefficient of Correlation, r = covar(x,y) / (sx*sy)

where sx and sy are the standard deviations of x and y
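The two formulas translate directly into Python; here statistics.stdev (the sample standard deviation, dividing by n-1) supplies sx and sy. The data set is hypothetical, chosen so that y falls exactly linearly as x rises, which should give r = -1. Function names are hypothetical.

```python
import statistics

def covariance(x, y):
    """[ Sum (xi - xmean)(yi - ymean) ] / (n - 1)"""
    xmean, ymean = statistics.mean(x), statistics.mean(y)
    return sum((xi - xmean) * (yi - ymean) for xi, yi in zip(x, y)) / (len(x) - 1)

def correlation(x, y):
    """r = covar(x, y) / (sx * sy), with sx and sy the sample standard deviations."""
    return covariance(x, y) / (statistics.stdev(x) * statistics.stdev(y))

x = [1, 2, 3, 4, 5]
y = [10, 8, 6, 4, 2]   # y falls exactly linearly as x rises
print(covariance(x, y), round(correlation(x, y), 4))  # -5.0 -1.0
```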

BTW, I see now that I'm going to need to make some graphics to display these formulas. I'm going to try to learn to use LaTeX for mathematical typesetting. I downloaded LEd, a LaTeX editor, today and I hope to start using it over the weekend. If anyone has experience with LaTeX and any editor, please let me know.

Posted by Eliezer at Friday, January 11, 2008 0 comments

Tags: Lecture Notes

Lecture 2 - Ch 3 - Shape of the Distribution

Although the skewness has a formal definition that we didn't discuss, we categorized the shape of a distribution as either symmetric, positive-skewed or negative-skewed.

Symmetric - mean = median

Positive-skewed - mean > median (think of this as having a longer tail on the right (positive) side of the distribution curve)

Negative-skewed - mean < median (here there's a longer tail on the left (negative) side of the curve)
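A rough Python sketch of the mean-vs-median rule above (this is not a formal skewness coefficient); the data sets and the function name are hypothetical.

```python
import statistics

def skew_direction(data):
    """Classify the shape by comparing the mean with the median."""
    mean, median = statistics.mean(data), statistics.median(data)
    if mean > median:
        return "positive-skewed"
    if mean < median:
        return "negative-skewed"
    return "symmetric"

print(skew_direction([1, 2, 3, 4, 100]))   # positive-skewed (long right tail)
print(skew_direction([-100, 1, 2, 3, 4]))  # negative-skewed (long left tail)
print(skew_direction([1, 2, 3, 4, 5]))     # symmetric
```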

A box-and-whiskers graph is a simple graphical summary of the skewness of the data.

Don't let this confuse you, but... Another measure of the shape of a distribution curve is the kurtosis. Sounds like a disease, doesn't it?

Posted by Eliezer at Friday, January 11, 2008 0 comments

Tags: Lecture Notes

Thursday, January 10, 2008

Lecture 2 - Ch 3 - Using Standard Deviation

Now that we understand how to calculate the standard deviation, we can define three statistical concepts that apply the standard deviation in practice. Those three concepts are the z-score, the empirical rule and Chebyshev's rule.

Z-score - The z-score of an observed value is the difference between the observed value and the mean, divided by the standard deviation. The z-score is used to express how many standard deviations an observation is away from the mean.

Empirical Rule - In a bell-shaped distribution:

1 standard deviation (in either direction from the mean) has about 68% of the data.

2 standard deviations have about 95% of the data.

3 standard deviations have about 99.7% of the data.

This "bell-shaped" distribution is called the normal distribution (its standardized form is the standard normal distribution) and we'll learn more about it in a later lecture/chapter.

Chebyshev Rule - In any distribution:

At least (1-1/z^2)*100% of the values will be within z standard deviations of the mean (for z > 1).

A few interesting things were noted briefly about Chebyshev's Rule:

1. It works with any, yes any, shaped distribution. That's really amazing.

2. Outside of the z standard deviations, we don't know anything about the data. Specifically, the distribution may not be symmetric, so we can't assume that the data outside the z std devs is evenly split between the two tails.

3. Chebyshev's Rule doesn't tell us anything about data within 1 standard deviation.
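A Python sketch comparing Chebyshev's guarantee with the empirical rule, assuming the empirical rule's percentages come from the normal distribution (the fraction of a normal distribution within z standard deviations of the mean is erf(z/SQRT(2)), computed here with math.erf). Function names are hypothetical.

```python
import math

def z_score(x, mean, sd):
    """How many standard deviations x is away from the mean."""
    return (x - mean) / sd

def chebyshev_min_fraction(z):
    """At least 1 - 1/z^2 of ANY distribution lies within z SDs of the mean (useful for z > 1)."""
    return max(0.0, 1 - 1 / z ** 2)

def normal_fraction_within(z):
    """Exact fraction within z SDs for a normal distribution."""
    return math.erf(z / math.sqrt(2))

print(z_score(130, 100, 15))  # 2.0
for z in (1, 2, 3):
    print(z, round(chebyshev_min_fraction(z), 3), round(normal_fraction_within(z), 3))
# 1 0.0 0.683
# 2 0.75 0.954
# 3 0.889 0.997
```

The Chebyshev column is a floor that holds for any shape, which is why it is so much lower than the bell-shaped percentages.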

Did you know? There's a crater on the moon named after Chebyshev. Source: USGS

Posted by Eliezer at Thursday, January 10, 2008 0 comments

Tags: Lecture Notes

New location

Class location has changed to Lewis 1509 to cut down on the noise from the El.

Posted by Eliezer at Thursday, January 10, 2008 0 comments

Tuesday, January 8, 2008

Lecture 1 - additional notes and research

Quartiles

I was surprised to find the following statement in the Quartile entry on Wikipedia (which Prof Selcuk confirmed): "There is no universal agreement on choosing the quartile values." There is a reference to an article in American Statistician by a couple of Australian professors (Hyndman and Fan). (Abstract: http://www-personal.buseco.monash.edu.au/~hyndman/papers/quantile.htm)

I was able to retrieve this article from the JSTOR Arts and Sciences 2 database via the NEIU library.

I found an interesting write-up on Defining Quartiles at Ask Dr. Math. After showing why quartiles are simple in concept but complex in execution, Dr. Math describes 5 methods for defining quartiles: Tukey, M&M, M&S, Minitab and Excel.

After reading the Dr. Math article, I see that the algorithm for defining quartiles that we discussed in class is the Minitab method, using x*(n+1)/4 to find quartile position and linear interpolation of closest data points to handle non-integral positions.

MS Excel

I was also surprised to find out that the accuracy of MS Excel is in question and that McCullough and Wilson have been writing in Computational Statistics & Data Analysis on the issues with Excel's algorithms and how they have changed in subsequent versions of MS Excel since 97.

Web Resources

I found a nice site that covers some of the material from lecture 1 (measures of central tendency and measures of spread) and more. It's called Stats4Students. I'm going to create a links section in the sidebar and add it.

Posted by Eliezer at Tuesday, January 08, 2008 0 comments

Lecture 1 - Quiz

Given:

Variance = 25

Coefficient of Variation = 10%

Compute the mean.

Answer:

We know the relationship between the coefficient of variation, standard deviation and the mean:

CV=(stddev/mean) * 100

We also know that the standard deviation is simply the square root of the variance:

Stddev = sqroot(variance)

Therefore, combining these two formulas and plugging in our givens, we get:

10 = (sqroot(25) / mean) * 100

10 = (5/mean) * 100

10 = 500 / mean

10 * mean = 500

mean = 50

The only thing tricky about this question is that you have to remember that the CV is stddev/mean, not variance/mean. If you remember that, along with the relationship between the stddev and variance, it's simple.
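The same arithmetic in Python, as a quick check of the rearranged formula mean = stddev * 100 / CV.

```python
import math

variance = 25
cv_percent = 10                      # CV = (stddev / mean) * 100
stddev = math.sqrt(variance)         # 5.0
mean = stddev * 100 / cv_percent     # rearranged: mean = stddev * 100 / CV
print(mean)                          # 50.0
```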

Posted by Eliezer at Tuesday, January 08, 2008 0 comments

Tags: Lecture Notes, Quizzes

Lecture 1 - Ch 3 - Descriptive Statistics

We now discuss some basic concepts we can use to quantitatively describe a set of values. Rather than describing the set by painting a picture with a graph, chart or table as we did in the previous blog post, we paint the picture in a more precise and analytical way using numbers.

One of the most fundamental ways to describe a set of values is to identify the central tendency of the set. We recognize that when looking at some sample, the statistic that we're interested in won't have the same value for every member of the set. But we're still interested in the central value for the set as a whole. We commonly call this central value the "average". However, there are several ways to calculate this central value which differ slightly:

(Arithmetic) Mean - commonly called the average

Median - the middle value (or average of the two middle values if there are an even number of observations). The position of the median value is (n+1)/2.

Mode - the most common value in the data set

We also introduce the following definitions:


Quartiles (Q1, Q2, Q3) divide the data into 4 equal parts in the same way the median divides it into 2 parts. Again, if the number of values is not evenly divisible into 4 groups with the same number of values, take the average of the 2 closest values. Q2 is always the same as the median.

Position of Q1 = (n+1)/4

Position of Q2 = (n+1)/2

Position of Q3 = 3(n+1)/4

Check the data in those positions to get values for Q1, Q2 and Q3.

If the positions of Q1, Q2 and/or Q3 are not integers, interpolate between the closest data points to get a value for the quartile.

Then a couple of statistical concepts to describe the dispersion (variation) of a set of values:

Range = maxvalue - minvalue

Interquartile Range = Q3-Q1. Use box-and-whiskers diagram to represent this graphically.

Variance - the sum of the squared differences between the values and the mean, divided by n-1

Standard Deviation - square root of the variance

Coefficient of variation - (stddev / mean) * 100
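A Python sketch computing the measures listed above for a small hypothetical data set. Python's statistics module supplies mean, median, mode, variance and stdev (the last two divide by n-1); the quartile helper follows the (n+1)/4 position rule with interpolation from these notes, and clamping positions to 1..n is an added assumption for very small samples. The helper name is hypothetical.

```python
import math
import statistics

def quartile(sorted_data, q):
    """Position q(n+1)/4, interpolating between neighbouring values."""
    n = len(sorted_data)
    pos = min(max(q * (n + 1) / 4, 1), n)   # keep the position inside 1..n
    lower = math.floor(pos)
    frac = pos - lower
    if frac == 0:
        return sorted_data[lower - 1]
    return sorted_data[lower - 1] + frac * (sorted_data[lower] - sorted_data[lower - 1])

data = sorted([2, 4, 4, 4, 5, 5, 7, 9])
mean = statistics.mean(data)
sd = statistics.stdev(data)   # sample standard deviation (divides by n - 1)
summary = {
    "mean": mean,
    "median": statistics.median(data),
    "mode": statistics.mode(data),
    "range": max(data) - min(data),
    "IQR": quartile(data, 3) - quartile(data, 1),
    "variance": statistics.variance(data),
    "std dev": sd,
    "CV %": sd / mean * 100,
}
for name, value in summary.items():
    print(f"{name:>8}: {value:.4g}")
```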

A few questions came up about the Variance:

1. Why do we square the deviation from the mean when calculating the variance?

Answer: So that positive and negative values don't cancel each other out. Squaring ensures that we always have a non-negative value.

Follow-up question: Why not just take the absolute value?

Answer: Because we'll want to differentiate this function, and the absolute value function is not differentiable at 0: it has a "kink" there where the derivative is undefined.

2. Why do we divide by n-1 and not n?

Answer: If we were looking at the entire population, we would indeed divide by N. But with a sample, we divide by n-1. I'm still not so clear on why that is.

Posted by Eliezer at Tuesday, January 08, 2008 0 comments

Tags: Lecture Notes

Lecture 1 - Ch 2 - Presentational Statistics

There are a number of different ways to graphically represent data, most of which are familiar to most people, but some may be new.

Familiar examples:

Summary table - categorize data and present in a table, often with percentages

Bar chart

Pie chart

This was new to me:

Stem-and-leaf diagram: It's used with numerical data. Take the first digit(s) of each value as its stem and the last digit as its leaf; each stem is written once, with all of its leaves (repeats included) listed next to it. In addition, a column is added to the left which indicates how many leaves are on each stem. It's kinda hard to describe the algorithm for constructing this diagram and it's best done with a demo.

The one thing that was unclear was why one of the counts was in parentheses. I looked this up in the Minitab help file and found: "If the median value for the sample is included in a row, the count for that row is enclosed in parentheses." The row with the median is not necessarily the row with the most leaves.
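A minimal Python sketch of the stem/leaf split for non-negative integers (the data set and function name are hypothetical). It leaves out Minitab's count column and leaf-unit options and only shows how each value is split into a stem and a leaf.

```python
from collections import defaultdict

def stem_and_leaf(data):
    """Stem = all digits but the last; leaf = the last digit (non-negative integers only)."""
    plot = defaultdict(list)
    for value in sorted(data):
        stem, leaf = divmod(value, 10)
        plot[stem].append(leaf)
    lines = []
    for stem in range(min(plot), max(plot) + 1):   # include empty stems too
        leaves = "".join(str(leaf) for leaf in plot[stem])
        lines.append(f"{stem:>3} | {leaves}")
    return lines

for line in stem_and_leaf([12, 15, 21, 24, 24, 37, 51]):
    print(line)
```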

Also somewhat new, but pretty much intuitively obvious:

Frequency Distribution and Histogram:

1. Sort your data

2. Determine the range

3. Select the number of classes (groups of equal width) into which you want to divide your data points

4. Compute the interval = range/# of classes (rounded up)

5. Determine the class boundaries based on the interval

6. Count the number of data points in each interval

Report the frequency and relative frequency (=freq/total # of data points) in a table

A Histogram is a bar chart (usually vertical) representation of the frequency of each class.
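A Python sketch of the frequency-distribution steps, assuming each class includes its lower boundary and excludes its upper boundary (except the last class, which also includes its upper boundary so the maximum is never dropped). The data set and function name are hypothetical.

```python
import math

def frequency_table(data, num_classes):
    """Return (lower boundary, upper boundary, frequency, relative frequency) per class."""
    data = sorted(data)                                   # 1. sort
    low, high = data[0], data[-1]
    interval = math.ceil((high - low) / num_classes)      # 2-4. range / # of classes, rounded up
    table = []
    for i in range(num_classes):                          # 5. class boundaries
        start, end = low + i * interval, low + (i + 1) * interval
        last = i == num_classes - 1
        count = sum(1 for x in data if start <= x < end or (last and x == end))  # 6. count
        table.append((start, end, count, count / len(data)))
    return table

for start, end, freq, rel in frequency_table([2, 4, 4, 4, 5, 5, 7, 9], 3):
    print(start, end, freq, rel)
# 2 5 4 0.5
# 5 8 3 0.375
# 8 11 1 0.125
```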

Some graphical ways to represent two variables:

Scatter Diagram - basically just an x-y plot of two variables

Time-Series Plot - plot of a single variable over time

Posted by Eliezer at Tuesday, January 08, 2008 0 comments

Tags: Lecture Notes

Lecture 1 - Ch 1 - Basic Definitions

Here are some basic definitions of terms used in the study of statistics:

When discussing statistics about a group, it's important to distinguish between the entire group and a subset of that group which we're able to look at in detail; we then extrapolate from that detailed analysis of the subset to the larger group. The entire group is called the population. The subset which we're analyzing is called the sample.

For example, we may want to know about the voting preferences among voters in the US. The population is all the people who are registered to vote in the upcoming election. Since we can't feasibly ask each and every one about their preferences, we ask a sample, perhaps just a few hundred or thousand voters, about their preferences and we extrapolate from the answers we get from the sample to the entire population.

Of course, you have to understand that this extrapolation involves a degree of uncertainty. Determining exactly what that uncertainty is will be the subject of a later lecture.

Population - the entire collection under discussion

Sample - a portion of the population selected for analysis

Parameter - a numerical measure that describes a characteristic of a population.

Statistic - a numerical measure that describes a characteristic of a sample.

Descriptive statistics - focuses on collecting, summarizing, and presenting a set of data.

Inferential statistics - uses sample data to draw conclusions about a population.

Categorical data - consist of categorical responses, such as yes/no, agree/disagree, Sunday/Monday/Tuesday, etc.

Numerical data - consist of numerical responses. Numerical data can be either discrete or continuous (see below).

Discrete data - usually refers to integer responses, typically counts, e.g. 0, 1, 2, 3, ...

Continuous data - consist of responses that can take any numerical value within a range, e.g. height, weight, or time.
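As a rough illustration of these data types, here's a toy Python rule of thumb that labels a list of responses by their Python types. This is only a proxy I made up: what really decides the type is what the values mean (zip codes are numbers, for instance, but they're categorical).

```python
def classify(responses):
    """Toy rule of thumb: label a list of responses by their Python types.

    Only a proxy; the real question is what the values mean.
    """
    if all(isinstance(r, (str, bool)) for r in responses):
        return "categorical"
    if all(isinstance(r, int) and not isinstance(r, bool) for r in responses):
        return "numerical (discrete)"
    if all(isinstance(r, (int, float)) and not isinstance(r, bool) for r in responses):
        return "numerical (continuous)"
    return "mixed"

print(classify(["agree", "disagree", "agree"]))  # categorical
print(classify([0, 1, 2, 3]))                    # numerical (discrete)
print(classify([1.72, 1.65, 1.80]))              # numerical (continuous)
```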

Posted by Eliezer at Tuesday, January 08, 2008

Tags: Lecture Notes

Monday, January 7, 2008

Lecture 1 - Introduction

Our first GSB 420 class was last Thursday night. We met our teacher, Cemil Selcuk, and found out that his first name is pronounced "je-mil". I think he also said that his last name is pronounced "sel-juk".

We'll have the DePaul standard:

4 lectures

Midterm (35%)

5 lectures

Final (45%)

The other 20% of the grade is:

Homework (15%)

>= 5 in-class pop quizzes (5%)
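Just to double-check that the weights add up (35 + 45 + 15 + 5 = 100), here's a quick sketch of how they'd combine into a course grade. The scores are hypothetical, and it assumes each component is scored out of 100, with the quiz component being the average of the quiz scores.

```python
# Grade weights from the syllabus, in percent.
WEIGHTS = {"midterm": 35, "final": 45, "homework": 15, "quizzes": 5}

def course_grade(scores):
    """Weighted average of component scores, each assumed to be on a 0-100 scale."""
    assert sum(WEIGHTS.values()) == 100
    return sum(WEIGHTS[k] * scores[k] for k in WEIGHTS) / 100

print(course_grade({"midterm": 80, "final": 90, "homework": 100, "quizzes": 60}))  # 86.5
```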

We'll be using Minitab in this class. I'm excited about that! I always like to learn new tools, especially powerful tools for number crunching. I would really have liked to get the student edition of SPSS, but I guess that's asking for too much. :) A few of the people in class didn't get Minitab bundled with their textbook. Bummer. They need to get it.

Surprisingly, the math review (algebra and calculus) is going to be an optional, time-permitting lecture. I would have expected those topics at the very beginning of the first lecture, but I'm kinda glad we might not cover them at all. At least it signals an expectation that we come in with a baseline knowledge of the subject.

For anyone who needs the reviews, I found some good notes at Prof. Timothy Opiela's web site. He teaches this course frequently, but not this quarter. Here's a link to his site: Opiela GSB 420.

Posted by Eliezer at Monday, January 07, 2008

Tags: Lecture Notes